Matching sets: aggregates vs. first miss

Comments enabled. I *really* need your comment

From Stack Overflow:

Here is my schema:

Suppliers sid sname address

Parts pid pname color

Catalog sid pid cost I need to find the sids of suppliers who supply every red part or every green part.

This task requires matching the sets.

We need to compare two sets here: the first one is the set of the parts of given color; the second one is the set of parts provided by a given supplier. The former should be the subset of the latter.

Unlike other engines, MySQL does not provide the set operators like EXCEPT or MINUS that allow to check the subset / superset relationship very easily. We have to use the record-based solutions.

There are two ways to check that:

First miss

technique: test each record from the subset candidate against the superset candidate, returningFALSEif there is no match.Aggregate

technique: compare the number of records in the subset candidate to the number of records in their intersection. If the numbers are equal, the sets match

Let's test which way is faster in which cases. To do this, we will need some sample tables:

Read the rest of this entry »

Sargability of monotonic functions: example

In my previous article I presented a proposal to add sargability of monotonic functions into the SQL engines.

In a nutshell: a monotonic function is a function that preserves the order of the argument so that it gives the larger results for the larger values of the argument. It is easy to prove that a B-tree with each key replaced by the result of the function will remain the valid B-Tree and hence can be used to search for ranges of function results just like it is used to search for ranges of values.

With a little effort, a B-Tree can also be used to search for the ranges of piecewise monotonic functions: those whose domain can be split into a number of continuous pieces with the function being monotonic within each piece (but it may be not monotonic and even not continuous across the pieces).

In this article, I'll demonstrate the algorithm to do that (implemented in pure SQL on PostgreSQL).

I will show how the performance of simple query

SELECT * FROM t_sine WHERE SIN(value) BETWEEN 0.1234 AND 0.1235

could be improved if the sargability of monotonic functions had been implemented in the optimizer.

Read the rest of this entry »

Things SQL needs: sargability of monotonic functions

I'm going to write a series of articles about the things SQL needs to work faster and more efficienly.

With these things implemented, most of the tricks I explain in my blog will become redundant and I'll finally have some more time to spend with the family.

Ever wondered why a condition like this:

WHERE TRUNC(mydate) = TRUNC(SYSDATE)

, which searches for the current day's records, is so elegant but so slow?

Of course this is because even if you create an index on mydate, this index cannot be used.

The expression in the left part of the equality is not a mydate. The database engine cannot find a way to use an index to search for it. It is said that this expression is not sargable.

Now, a little explanation about the indexes and sargability. If you are familiar with these, you can skip this chapter. But beware that this chapter is the only one illustrated, so skipping it will make the article too boring to read.

Ahem.

To locate a record in a B-Tree index, the keys of the index should be compared to the value being searched for.

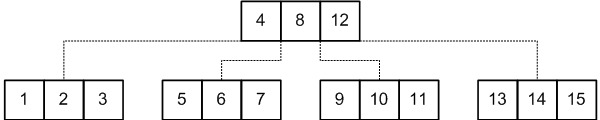

Let's consider this sample B-Tree index:

Tags in nested sets: efficient indexing

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Travis asks:

I just read your excellent post: Hierarchical data in MySQL: parents and children in one query.

I am currently trying to do something very similar to this. The main difference is, I am working with data that is in a nested set.

I would like to construct a query that will return a resultset of nodes matched with

LIKE, the ancestors of each of these nodes, and the immediate children of each of these nodes.

This is a very interesting question: it allows to demonstrate the usage of three types of indexes usable by MyISAM tables: BTREE, SPATIAL and FULLTEXT.

Nested sets is one of two models that are most often used to store hierarchical data in a relational table (the other one being adjacency list model). Unlike adjacency list, nested sets does not require ability to write recursive queries, which SQL originally lacked and still lacks now in MySQL (albeit it can be emulated to some extent). It is widely used in MySQL world.

I described both methods and their comparison in the article I wrote some time ago:

The main problem with the nested sets model is that though it is extremely fast with selecting descendants of a given node, it is very slow in selecting ancestors.

This is because of the way the B-Tree indexes work. They can be used to query for values of a column within the range of two constants, but not for the values of two columns holding a single constant between them. One needs the first condition to select the children (found between the lft and rgt of the given node), and the second condition to select the ancestor (with lft and rgt containing the lft and rgt of the given node).

That's why selecting the children is fast and selecting the ancestors is slow.

To work around this, the sets that form the hierarchy can be described as geometrical objects, with the larger sets containing the smaller sets. These sets can be indexed with a SPATIAL index which is designed specially for this purpose and both children and ancestors can be queried for very efficiently.

Unfortunately, finding the depth level is quite a hard task for the nested sets model even with the SPATIAL indexes.

It would be quite an easy task is MySQL supported recursion: we could just run a query to find the siblings of each record by skipping their whole domains recursively.

However, MySQL's recursion support is very limited and it relies on the session variables, which are not recommended to use in the complex queries.

To cope with this, we need to mix the nested sets and the adjacency list models. Hierarchy will be stored in two seemingly redundant ways: the unique parent and the LineString representing the nested sets.

This will help us to use the R-Tree index to find all ancestors of a given node and also use B-Tree index to find its immediate children.

Finally, the question mentions using LIKE to find the initial nodes. LIKE predicate with the leading wildcards is not sargable in MySQL. However, it seems that the leading wildcards are only used to split the words. In this case, a FULLTEXT index and the MATCH query would be much more efficient, since FULLTEXT index allows indexing a single record with several keys (each one corresponding to a single word in the column's text), so a search for the word in the space separated or a comma separated list uses the index and is much faster than scanning the whole table.

Hence, the query would use all three main types of indexes: BTREE, SPATIAL and FULLTEXT.

To illustrate everything said above, let's create a sample table:

Read the rest of this entry »

SQL Server: EXCEPT ALL

Answering questions asked on the site.

myst asks:

I have two really large tables with lots of columns, many of them are nullable

I need to remove all rows from

table1which are not present intable2, but there can be duplicates in both tables and writing more than 70IS NULLconditions would be a pain, and I want to make sure there's nothing I'm missing.Is there a more simple way?

SQL Server supports EXCEPT clause which returns all records present in the first table and absent in the second one. But this clause eliminates duplicates and cannot be used as a subject to a DML operation.

ANSI SQL standard describes EXCEPT ALL which returns all records from the first table which are not present in the second table, leaving the duplicates as is. Unfortunately, SQL Server does not support this operator.

Similar behavior can be achieved using NOT IN or NOT EXISTS constructs. But in SQL Server, IN predicate does not accept more than one field. NOT EXISTS accepts any number of correlated columns, but it requires extra checks in the WHERE clause, since equality operator does not treat two NULL values as equal. Each pair or nullable columns should be additionally checked for a NULL in both fields. This can only be done using OR predicates or COALESCE, neither of which adds to performance.

But there is a way to emulate EXCEPT ALL in SQL Server quite elegantly and efficiently.

Let's create two sample tables:

Read the rest of this entry »

Index search time depends on the value being searched

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Daniel asks:

I have a table which stores track titles in a

VARCHAR(200)field. The field is indexed, but searching for titles beginning with a letter Z is noticeably slower than for those beginning with A, and the closer the initial letter is to Z, the slower is the query.My understanding is that a full table scan occurs, but

EXPLAINshows that the index is used. Besides, the table is quite large but the query is still reasonably fast.Could you please explain this behavior?

MySQL stores its indexes in B-Tree data structures.

The Wikipedia link above explains the structure quite well so I won't repeat it. I'll rather draw a picture similar to the one in the article:

This picture is quite self-explanatory. The records are sorted in a tree order, so if you are searching for a certain value, say, 11, you, starting from the first page, should find the link to follow. To do this, you need to find the pair of values less than and greater than 11. In this case, you should follow the link which is between 8 and 12. Then you search for the next pair, etc, until you find your value or reach the end and make sure that your value is not there.

Following the links is quite simple, but how does the engine search for the values within one page?

This depends on how you declared the table.

MyISAM supports two algorithms for storing the index keys in a page: packed keys and unpacked keys.

Read the rest of this entry »

Searching for arbitrary portions of a date

Comments enabled. I *really* need your comment

From Stack Overflow:

I have a Ruby on Rails application with a PostgreSQL database; several tables have

created_atandupdated_attimestamp attributes.When displayed, those dates are formatted in the user's locale; for example, the timestamp 2009-10-15 16:30:00.435 becomes the string 15.10.2009 - 16:30 (the date format for this example being

dd.mm.yyyy - hh.mm).The requirement is that the user must be able to search for records by date, as if they were strings formatted in the current locale.

For example, searching for 15.10.2009 would return records with dates on October 15th 2009; searching for 15.10 would return records with dates on October 15th of any year, searching for 15 would return all dates that match 15 (be it day, month or year).

The simplest solution would be just retrieve the locale string from the client, format the dates according to that string and search them using LIKE or ~ operators (the latter, as we all know, searches for POSIX regular expressions).

However, this would be not very efficient.

Let's create a sample table and see:

Read the rest of this entry »

Join on overlapping date ranges

Comments enabled. I *really* need your comment

This post is inspired by a discussion with John Didion:

Is there any way to optimize the query for overlapping ranges in MySQL if both ranges are dynamic?

I have two tables, each with integer range columns (specified as

LineString), and I want to find rows that overlap.No matter what I try, the query planner never uses any indexes.

This question addresses a well-known problem of efficient searching for the intersecting intervals. The queries that deal with it require ability to search for the intervals (stored in two distinct columns) containing a constant scalar value.

Plain B-Tree indexes used by most databases do not speed up the queries like that. However, MySQL supports SPATIAL indexes that can index two-dimensional shapes and efficiently search for the shapes containing a given point.

With a little effort, time intervals can be converted into the geometrical objects, indexed with a SPATIAL index and searched for the given point in time (also presented as a gemetrical object). This is described in the article about overlapping ranges in MySQL.

The query described in that article, however, searches for the intervals overlapping a constant range, provided as a parameter to the query. Now, I will discuss how to adapt the query for a JOIN between two tables.

Let's create two sample tables, each containing a set of time ranges stored as geometrical objects, and find all records from both tables whose ranges overlap:

Read the rest of this entry »

Aggregates: subqueries vs. GROUP BY

From Stack Overflow:

I have a table

usersand there is a fieldinvited_by_idshowing user id of the person who invited this user.I need to make a MySQL query returning rows with all the fields from users plus a

invites_countfield showing how many people were invited by each user.

The task seems very simple (and it is in fact), but there are at least two approaches to do it. It this article, I will discuss the benefits and the drawbacks of each approach.

GROUP BY

The first approach is using GROUP BY:

SELECT u.*, COUNT(ui.id)

FROM users u

LEFT JOIN

users ui

ON ui.invited_by = u.id

GROUP BY

u.id

This is a GROUP BY with a self join, very simple. There are only two little things I'd like to pay some attention to.

First, we need to select all users, even those who invited no other members. An inner join would leave them out, so we use a LEFT JOIN, and use COUNT(ui.id) instead of COUNT(*), because, due to the very nature of aggregation, COUNT(*) returns at least 1 in a query with GROUP BY, and COUNT(ui.id) skips NULLs (which can only result from a LEFT JOIN miss).

Second, we group by u.id but use u.* in the SELECT clause. Every other engine would fail in this case, but MySQL allows selecting fields that are neighter grouped by nor aggregated. These fields will return an arbitrary value from any of the aggregated records (in practice, that is the record first read in its group).

This behavior is often abused, since MySQL does not guarantee what exactly it will return, but it's perfectly valid for the queries like ours. We don't need to clutter the GROUP BY clause with all fields from users if we have already grouped by the PRIMARY KEY which is already unique. All other values from users are uniquely defined by the PRIMARY KEY so there is no matter which arbitrary record will the query use to return ungrouped values: they are all same within the group.

Subquery

This solution involves correlated subqueries:

SELECT u.*,

(

SELECT COUNT(*)

FROM users ui

WHERE ui.invited_by = u.id

)

FROM users u

Here, we calculate the COUNT(*) in a correlated subquery. This query returns exactly same records as the previous one.

Comparison

Let's create two sample tables (a MyISAM one and an InnoDB one) and see which solution is more efficient for different scenarios:

Read the rest of this entry »

Counting concurrent sessions

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Steve asks:

I am trying to query a log table for a web service application and determine how many concurrent sessions are in progress at each moment a transaction is executed, based on a start date and an elapsed time for each command executed through the web service. (These metrics are logged after the fact, I'm trying to write daily performance reporting for the site).

Here's a simplified view of my base table design:

CREATE TABLE CONNECTIONS ( USERID VARCHAR2(30), HANDLE VARCHAR2(40), PROCESS_DATE DATE, COMMAND NUMBER(6,0), COUNT NUMBER(10,0), ELAPSED_TIME NUMBER(10,0), NUM_OF_RECS NUMBER(10,0), COMMAND_TIMESTAMP TIMESTAMP (6) )

The question is: at there moment each transaction started, how many other transactions were active?

At each given moment, there is some number of active transaction. A transaction is active if the transaction begins before that moment and ends after it. This means that the moment should fall between command_timestamp and command_timestamp + elapsed_time / 86400000.

Database B-Tree indexes are not very good in queries that involve searching for a constant between two columns, so a self-join on the condition described above would be possible but not very efficient.

But these is a more simple solution.

Whenever a transaction starts, it increments the count of the open transactions. Whenever the transaction ends, it decrements it.

So we just can build a table of events

: starts and ends of the transactions, ordered chronologically. Each start

would be denoted with a +1, and each end

with a -1. Then we should just calculate the number of the transactions open so far and subtract the number of the transactions closed.

This can be easily done merely by calculating the partial sum of these +1's and -1's, which is an easy task for Oracle's analytic functions.

Let's create a sample table. I'll put only the relevant columns there and add a stuffing column that would emulate actual payload, to measure performance:

Read the rest of this entry »

Subscribe in a reader

Subscribe in a reader