Archive for October, 2009

MySQL ORDER BY / LIMIT performance: late row lookups

From Stack Overflow:

When I run an SQL command like the one below, it takes more than 15 seconds:

SELECT * FROM news WHERE cat_id = 4 ORDER BY id DESC LIMIT 150000, 10

EXPLAINshows that its usingwhereand the index on(cat_id, id)

LIMIT 20, 10on the same query only takes several milliseconds.

This task can be reformulated like this: take the last 150,010 rows in

id order and return the first 10 of them

It means that though we only need 10 records we still need to count off the first 150,000.

The table has an index which keeps the records ordered. This allows us not to use a filesort. However, the query is still far from being efficient: 15 seconds for 150,000 records (which are already ordered) is way too much.

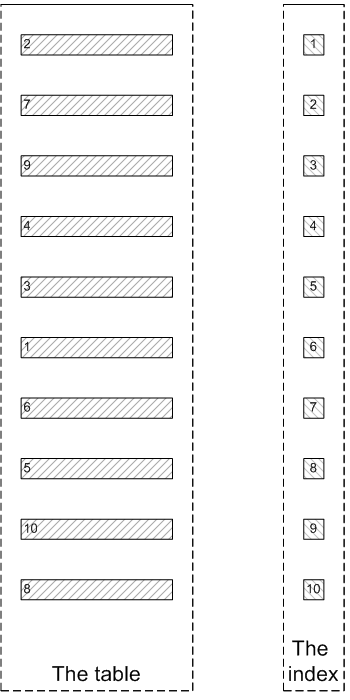

To better understand the reason behind the low performance let's look into this picture:

As we already said before, there is an index created on the table. Logically, an index is a part of a table which is not even visible from the SQL side: all queries are issued against the table, not the index, and the optimizer decides whether to use the index or not.

However, physically, an index is a separate object in the database.

An index is a shadow copy of the table which stores some subset of the table's data:

- Index key, i. e. the columns which the index created on.

- Table pointer, that is some value that uniquely identifies a row the record reflects. In InnoDB, it is the value of the

PRIMARY KEY; in MyISAM, it is an offset in the.MYDfile.

The index records are stored in a B-Tree structure which make the ref and range searching on them super fast.

However, the index itself does not contain all table's data: only the subset we described above. To find the actual table values while preserving order, one needs to join the index and the table. That is for each index record the engine should find the corresponding table record (using the row pointer) and return all non-indexed values from the table itself.

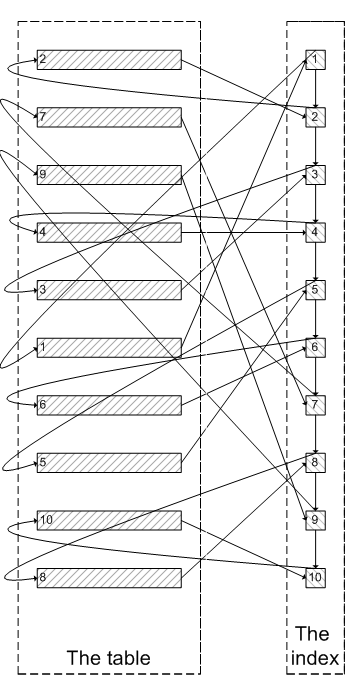

Here's how it looks:

The process of fetching the table records corresponding to the index records is called row lookup. It is pictured by the curvy arrows connecting the index and the table.

Since the index records and the table records are located far away from each other in the memory and on the disk, the row lookup leads to much more page misses, cache misses and disk seeks that a sequential access and is therefore quite costly. It takes much time to traverse all the connectors on the picture above.

If we do a plain query which returns all the records we of course need to fetch all the records and each row lookup is necessary.

But do we really need it when we use a LIMIT clause with the offset greater than 0?

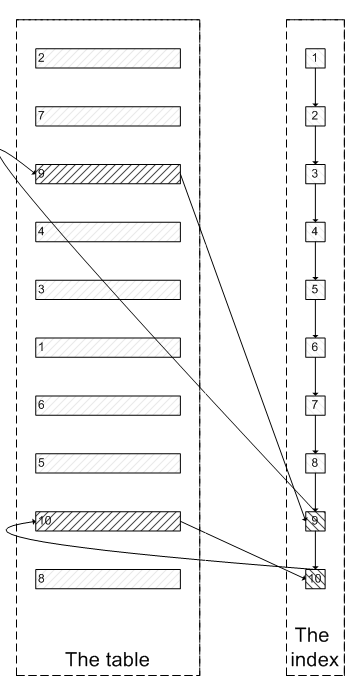

If we did something like LIMIT 8, 2 we could just skip the first 8 values using the index and the return the remaining two:

We see that this is a much more efficient algorithm that will save us lots of row lookups.

This is called late row lookup: the engine should look a row up only if there is no way to avoid it. If there is a chance that a row will be filtered out by using the indexed fields only, it should be done before the rows are looked up in the actual MySQL table. There is no point in fetching the records out of the table just to discard them.

However, MySQL always does early row lookup: it searches for a row prior to checking values in the index, even the optimizer decided to use the index.

Let's create a sample table and try to reproduce this behavior:

CREATE TABLE filler (

id INT NOT NULL PRIMARY KEY AUTO_INCREMENT

) ENGINE=Memory;

CREATE TABLE t_limit (

id INT NOT NULL,

value VARCHAR(20) NOT NULL,

stuffing VARCHAR(200) NOT NULL,

CONSTRAINT pk_limit_id PRIMARY KEY (id)

) ENGINE=MyISAM DEFAULT CHARSET=utf8;

DELIMITER $$

CREATE PROCEDURE prc_filler(cnt INT)

BEGIN

DECLARE _cnt INT;

SET _cnt = 1;

WHILE _cnt <= cnt DO

INSERT

INTO filler

SELECT _cnt;

SET _cnt = _cnt + 1;

END WHILE;

END

$$

DELIMITER ;

START TRANSACTION;

CALL prc_filler(200000);

COMMIT;

INSERT

INTO t_limit

SELECT id, CONCAT('Value ', id), RPAD('', 200, '*')

FROM filler;

This MyISAM table contains 200,000 records and has a PRIMARY KEY index on id. Each record is filled with 200 bytes of stuffing data.

Here's the query to select values from 150,001 to 150,010:

SELECT id, value, LENGTH(stuffing) AS len

FROM t_limit

ORDER BY

id

LIMIT 150000, 10

This query works for almost 6 seconds which is way too long.

It, however, uses a filesort which the optimizer considered more efficient than using the index. This would make sense if not for the stuffing field which is too long to be sorted efficiently. In this very case traversing the index would be faster.

Let's try to force the index:

SELECT id, value, LENGTH(stuffing) AS len

FROM t_limit FORCE INDEX (PRIMARY)

ORDER BY

id

LIMIT 150000, 10

Now it is only 1.23 seconds but still too long due to the early row lookups.

We, however, can trick MySQL to use the late row lookups.

We will only select the id in the subquery with an ORDER BY and LIMIT and then join the original table back on id.

This will make each individual row lookup less efficient, since each join will require looking up the index value again. However, this is not much of a deal, and the total number of lookups will be reduced greatly, so overall performance increase is expected:

SELECT l.id, value, LENGTH(stuffing) AS len

FROM (

SELECT id

FROM t_limit

ORDER BY

id

LIMIT 150000, 10

) o

JOIN t_limit l

ON l.id = o.id

ORDER BY

l.id

This is only 75 ms, or 16 times as fast as the previous query.

Note that we put an additional ORDER BY to the end of the query, since the order is not guaranteed to be preserved after the join. This resulted in an extra filesort in the plan. However, the actual plan used outputs the values already sorted and this filesort, therefore, will require only a single pass over 10 values which is instant.

Generating row placeholders: SQL Server

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Vladimir asks:

I have two tables: one contains document headers, the other one contains document items.

The document header defines how many items should be in this document (usually 10 or 15, but this can vary).

I need to generate a list of documents and a list of document items to feed them to a third party control. Normally, this could be easily achieved by doing a

SELECT *from both tables, but there is a problem. The control requires that the number of items for each document is equal to the value of the columns in the document header.That is, if document header says 15 items, there should be exactly 15 records for this document in the items rowset, even if there are less items in the actual table. It's OK if the extra items have a value of NULL.

I tried to use

LEFT JOINandFULL JOINbut cannot figure out how to use them.

This is quite a common problem in SQL world.

SQL as such has no means to generate sets, only to transform them. In classical SQL, if we have a relation that contains 2 records on input and need to have a relation that contains 16 records on output, then we will need to do at least 4 cross joins — just to have enough rows to begin with.

There are two solutions to that problem.

First is having a dummy rowset handy: create a table of 1,000,000 records or use some kind of a system table whose only purpose is to provide enough rows (the actual values of them can be completely ignored).

Second solution is to generate rows on the fly using system-provided means. This is impossible in MySQL, however, all other major systems can do it using generate_series (PostgreSQL, the only system that has a native tool specially for this purpose); CONNECT BY query (Oracle); or, in your case, a recursive CTE (SQL Server).

Recursive queries are somewhat less efficient than stored tables, since they require looping and therefore having much logical reads, while reading from a dummy table requires but a few table scans.

However, the recursive queries also have several advantages:

- They are always available, even if you querying a database that lacks a dummy table and you don't have a write access to the database

- They always have enough records

- They have consistent plans. If the dummy table contains lots of records and the optimizer chooses a fullscan, this can kill performance

That's why I'll base my solution on the recursive CTE.

The main idea to generate a list of document items is simple:

- Each document header we should replicate exactly as many times as the header says (using a recursive CTE), with an ordinal number

- We also should assign an ordinal number to each document item, using

ROW_NUMBER() - Finally, we should just left join the two resultsets

The query will look like this:

Read the rest of this entry »

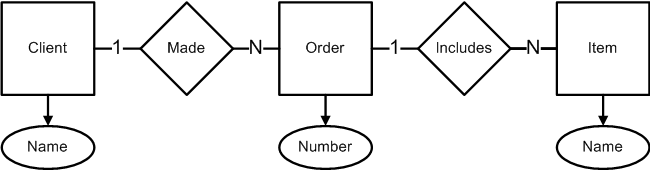

What is entity-relationship model?

Image by Samuel Mann

A relational database, as we all know from the previous article, stores relations between integers, strings and other simple types in a very plain way: it just enumerates all values related.

This model is extremely flexible, since other relations can be easily derived from existing ones using mathematical formulae, and the relational database takes care of that.

However, database should reflect the real world in some way to be really of use. Since the relational databases store relations between mathematical abstractions and not real world things, we should make some kind of a mapping of ones to the others.

This is what entity-relationship model is for.

An entity-relationship model, as one can easily guess from its name, models relationships between entities.

But since we know that databases do essentially the same, how does it differ from the database model?

- An entity-relationship model states which data and relations between them should be stored

- A database model states how these relations are stored

In other words, ER model is design and database model is one of the ways to implement it. ER model is said to be above the database model in the waterfall developement.

Note that in both cases I use the word stored above. The model says nothing of data and relations between them that may or should exist, only of those that should be stored. Every human being participates in thousands if not millions relationships, but an ER model should state which of them are to be stored, and a relational model should decide how to store them. Of course it would be nice to store them all (and police and insurance would be just happy), but the technology does not allow it yet. Don't know whether it is good or bad.

A typical entity-relationship diagram in Peter Chen's notation looks like this:

What does it mean?

Read the rest of this entry »

Constraints and the optimizer in SQL Server: FOREIGN KEY

Comments enabled. I *really* need your comment

Continuing on from the yesterday's article.

I was wondering: do the constraints declared on tables in SQL Server affect the decisions made by the optimizer?

SQL Server allows to define the following constraints on the columns:

PRIMARY KEYUNIQUEFOREIGN KEYCHECKDEFAULT

Today we will see how FOREIGN KEY affect the plans.

A FOREIGN KEY guarantees that every value of the column constrained with it is contained in a PRIMARY KEY or UNIQUE column of another table.

This fact can be used to simpify joins on these columns and using IN clause.

Let's create the sample tables:

Read the rest of this entry »

Constraints and the optimizer in SQL Server: PRIMARY KEY and UNIQUE

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Mitch asks:

I was wondering: do the constraints declared on tables in SQL Server affect the decisions made by the optimizer?

SQL Server allows to define the following constraints on the columns:

PRIMARY KEYUNIQUEFOREIGN KEYCHECKDEFAULT

Since these constraints imply some restrictions on data, it gives a theoretical possibility to use these restrictions while optimizing the queries so that some extra checks (which had already been made by the constraints) can be skipped.

SQL Server does use these possibilities and optimizes its plans taking the constraints into account.

In this article we will see how SQL Server uses PRIMARY KEY and UNIQUE to optimize the plans.

PRIMARY KEY and UNIQUE

Let's create a pair of sample tables:

Read the rest of this entry »

Running root mean square: SQL Server

Comments enabled. I *really* need your comment

From Stack Overflow:

Lets say I have the following in a SQL Server table:

Value 1 3 2 6 3 I need to get the differences of each of these numbers (in order), then square them, sum them, divide by number of values and take the square root.

This value is called root mean square. It is widely used in statistics.

However, we should calculate it for the differences between the values, not for the values themselves. In Oracle, the differences between rows could be calculated easilty using LAG, an analytical function that returns a previous row. Unfortunately, SQL Server lacks this function.

It could be emulated using a subquery or CROSS APPLY along with ORDER BY and TOP, but this makes the query less elegant and less efficient for large tables (since using TOP in subqueries and CROSS APPLY forces NESTED LOOPS).

It is better to assign the row number to each row (using ROW_NUMBER) and then just make an equijoin between two consecutive rows. This looks much better and the optimizer is free to choose any join method, including Hash Join which is most efficient when large percent of the table rows participates in a join, or a Merge Join which will be also efficient, given that ROW_NUMBER() tends to output the ordered resultsets and SQL Server can utilize this fact.

Here's the query:

WITH nums AS ( SELECT value, ROW_NUMBER() OVER (ORDER BY orderer) AS rn FROM source ) SELECT SQRT(AVG(POWER(np.value - nn.value, 2))) FROM nums np JOIN nums nn ON nn.rn = np.rn + 1

And let's try it on the sample data:

WITH source (orderer, value) AS ( SELECT 10, 1 UNION ALL SELECT 20, 3 UNION ALL SELECT 30, 2 UNION ALL SELECT 40, 6 UNION ALL SELECT 50, 3 ), nums AS ( SELECT value, ROW_NUMBER() OVER (ORDER BY orderer) AS rn FROM source ) SELECT SQRT(AVG(POWER(np.value - nn.value, 2))) FROM nums np JOIN nums nn ON nn.rn = np.rn + 1

| 2.6457513110645907 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 1 row fetched in 0.0001s (0.0007s) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Table 'Worktable'. Scan count 0, logical reads 0, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0. SQL Server Execution Times: CPU time = 0 ms, elapsed time = 1 ms.

|--Compute Scalar(DEFINE:([Expr1027]=sqrt(CONVERT_IMPLICIT(float(53),[Expr1026],0))))

|--Compute Scalar(DEFINE:([Expr1026]=CASE WHEN [Expr1038]=(0) THEN NULL ELSE [Expr1039]/CONVERT_IMPLICIT(int,[Expr1038],0) END))

|--Stream Aggregate(DEFINE:([Expr1038]=COUNT_BIG(power([Union1011]-[Union1024],(2.000000000000000e+000))), [Expr1039]=SUM(power([Union1011]-[Union1024],(2.000000000000000e+000)))))

|--Merge Join(Inner Join, MANY-TO-MANY MERGE:([Expr1025])=([Expr1033]), RESIDUAL:([Expr1025]=([Expr1012]+(1))))

|--Sequence Project(DEFINE:([Expr1025]=row_number))

| |--Compute Scalar(DEFINE:([Expr1035]=(1)))

| |--Segment

| |--Sort(ORDER BY:([Union1023] ASC))

| |--Constant Scan(VALUES:(((10),(1)),((20),(3)),((30),(2)),((40),(6)),((50),(3))))

|--Compute Scalar(DEFINE:([Expr1033]=[Expr1012]+(1)))

|--Sequence Project(DEFINE:([Expr1012]=row_number))

|--Compute Scalar(DEFINE:([Expr1037]=(1)))

|--Segment

|--Sort(ORDER BY:([Union1010] ASC))

|--Constant Scan(VALUES:(((10),(1)),((20),(3)),((30),(2)),((40),(6)),((50),(3))))

The query works, uses the Merge Join and gives a correct result.

Minimum and maximum on bit fields: SQL Server

Comments enabled. I *really* need your comment

From Stack Overflow:

I have this query in SQL Server 2005:

SELECT * FROM tblJobs AS j -- joining other tables here WHERE Closed = 0 AND Invoiced = 0 AND Active = 1 AND DepartmentId <> 2 AND dbo.fncIsAllPointsDelivered(JobID) = 1This query is taking too long to run, and I know the problem is the UDF:

dbo.fncIsAllPointsDelivered(J.JobID) = 1The SQL for the UDF is here:

DECLARE @DetailCount int DECLARE @TrackingCount int SELECT @DetailCount = COUNT(*) FROM tblLoadDetails WHERE JobId = @JobId SELECT @TrackingCount = COUNT(*) FROM tblLoadDetails WHERE JobId = @JobId AND Delivered = 1 IF (@DetailCount = @TrackingCount AND @DetailCount > 0) RETURN 1 RETURN 0All of this runs blazingly fast unless the job has a large number of load details in it.

I am trying to think of a way to either make the UDF faster or get rid of the need for the UDF, but I am at a loss.

UDF is definitely a problem here, since the conditions based on the UDF's are not sargable, that is an index cannot be used to search for them.

Let's look closed into this UDF.

Given a @JobID, the function searches the load details for this job. For the function to succeed, all load details should have the state of Delivered, and there must be at least one load detail for this job.

The UDF can be rewritten using IN and NOT IN conditions. The first condition makes sure that there is at least one load detail for the given job, the second one ensures that all load details have state Delivered.

Let's create a sample table and see how it works:

Read the rest of this entry »

Oracle: nested SUM

From Stack Overflow:

Suppose I have this table

A B C D Datatypes are not important.

I want to do this:

SELECT a AS a1, b AS b1, c AS c1, ( SELECT SUM(d) FROM source WHERE a = a1 AND b = b1 ) AS total FROM source GROUP BY a, b, c, but I can't find a way (SQL Developer keeps complaining with

.)FROMclause not foundIs there a way? Is it possible?

This is of course possible if we just alias the query and prepend the alias to the field:

SELECT a, b, c, ( SELECT SUM(d) FROM source si WHERE si.a = so.a AND si.b = so.b CONNECT BY 16 >= level ) FROM source so GROUP BY a, b, c

This works well on this sample set of data:

WITH source AS ( SELECT FLOOR(MOD(level - 1, 8) / 4) + 1 AS a, FLOOR(MOD(level - 1, 4) / 2) + 1 AS b, MOD(level - 1, 2) + 1 AS c, level AS d FROM dual CONNECT BY 16 >= level ) SELECT a, b, c, ( SELECT SUM(d) FROM source si WHERE si.a = so.a AND si.b = so.b ) FROM source so GROUP BY a, b, c

, but it needs to reevaluate the subquery for each group.

In Oracle, there is a better way to do this query: nesting an aggregate SUM inside an analytical SUM.

Read the rest of this entry »

IN list vs. range condition: MySQL

Answering questions asked on the site.

Princess asks:

Hello, I've got a problem with SQL.

I have a table which contains the production details for the factories. They are not factories and items of course but I cannot disclose the project and need to obfuscate so let's pretend they are :)

I need to select the items for which the first 5 factories have low production rate.

I tried to do the query like this:

SELECT ProductionItem FROM FactoryProductions WHERE 5 >= FactoryID AND 100 >= ProductionAmountwhich returns correct results but is slow.

I have an index on

(FactoryID, ProductionAmount).There are 13 Factories and 2,300,000 Items

This is in MySQL

This is a nice illustration of how index range evaluation works in MySQL and how to optimize it.

We will assume that the ProductionAmount is usually much higher than 100

Now, let's create a sample table:

Read the rest of this entry »

Shuffling rows: PostgreSQL

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Josh asks:

I am building a music application and need to create a playlist of arbitrary length from the tracks stored in the database.

This playlist should be shuffled and a track can repeat only after at least 10 other tracks had been played.

Is it possible to do this with a single SQL query or I need to create a cursor?

This is in PostgreSQL 8.4

PostgreSQL 8.4 is a wise choice, since it introduces some new features that ease this task.

To do this we just need to keep a running set that would hold the previous 10 tracks so that we could filter on them.

PostgreSQL 8.4 supports recursive CTE's that allow iterating the resultsets, and arrays that can be easily used to keep the set of 10 latest tracks.

Here's what we should do to build the playlist:

- We make a recursive CTE that would generate as many records as we need and just use LIMIT to limit the number

- The base part of the CTE is just a random record (fetched with

ORDER BY RANDOM() LIMIT 1) - The base part also defines the queue. This is an array which holds 10 latest records selected. It is initialized in the base part with the

idof the random track just selected - The recursive part of the CTE joins the previous record with the table, making sure that no record from the latest 10 will be selected on this step. To do this, we just use the array operator

<@(contained by) - The recursive part adds newly selected record to the queue. The queue should be no more than 10 records long, that's why we apply array slicing operator to it (

[1:10])

Let's create a sample table:

Read the rest of this entry »

Subscribe in a reader

Subscribe in a reader