Archive for the ‘MySQL’ Category

MySQL ORDER BY / LIMIT performance: late row lookups

From Stack Overflow:

When I run an SQL command like the one below, it takes more than 15 seconds:

SELECT * FROM news WHERE cat_id = 4 ORDER BY id DESC LIMIT 150000, 10

EXPLAIN shows that it's using where and the index on (cat_id, id)

LIMIT 20, 10 on the same query only takes several milliseconds.

This task can be reformulated like this: take the last 150,010 rows in id order and return the first 10 of them.

It means that though we only need 10 records we still need to count off the first 150,000.

The table has an index which keeps the records ordered. This allows us not to use a filesort. However, the query is still far from being efficient: 15 seconds for 150,000 records (which are already ordered) is way too much.

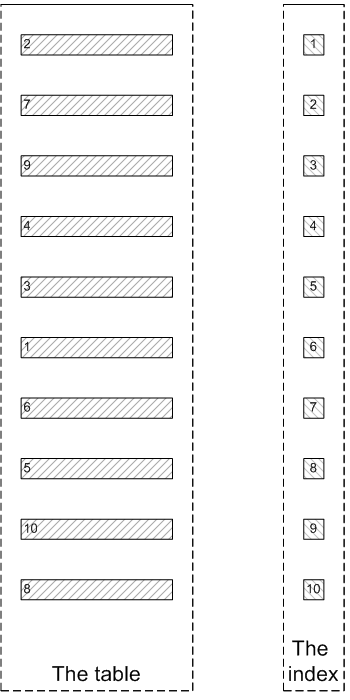

To better understand the reason behind the low performance let's look into this picture:

As we already said before, there is an index created on the table. Logically, an index is a part of a table which is not even visible from the SQL side: all queries are issued against the table, not the index, and the optimizer decides whether to use the index or not.

However, physically, an index is a separate object in the database.

An index is a shadow copy of the table which stores some subset of the table's data:

- Index key, i. e. the columns which the index is created on.

- Table pointer, that is some value that uniquely identifies the row the record reflects. In InnoDB, it is the value of the PRIMARY KEY; in MyISAM, it is an offset in the .MYD file.

The index records are stored in a B-Tree structure which makes the ref and range searching on them super fast.

However, the index itself does not contain all of the table's data: only the subset we described above. To find the actual table values while preserving order, one needs to join the index and the table. That is, for each index record the engine should find the corresponding table record (using the row pointer) and return all non-indexed values from the table itself.

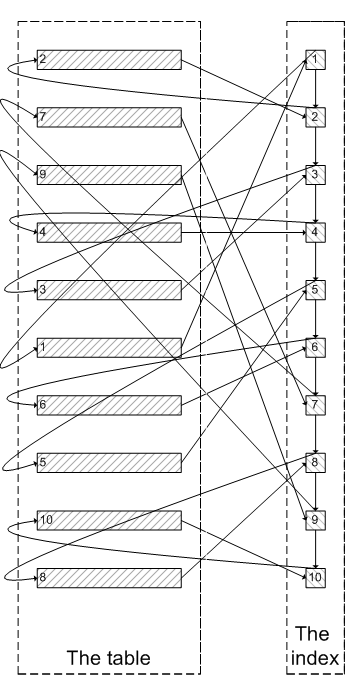

Here's how it looks:

The process of fetching the table records corresponding to the index records is called row lookup. It is pictured by the curvy arrows connecting the index and the table.

Since the index records and the table records are located far away from each other in memory and on disk, the row lookup leads to many more page misses, cache misses and disk seeks than sequential access and is therefore quite costly. It takes much time to traverse all the connectors on the picture above.

If we do a plain query which returns all the records we of course need to fetch all the records and each row lookup is necessary.

But do we really need it when we use a LIMIT clause with the offset greater than 0?

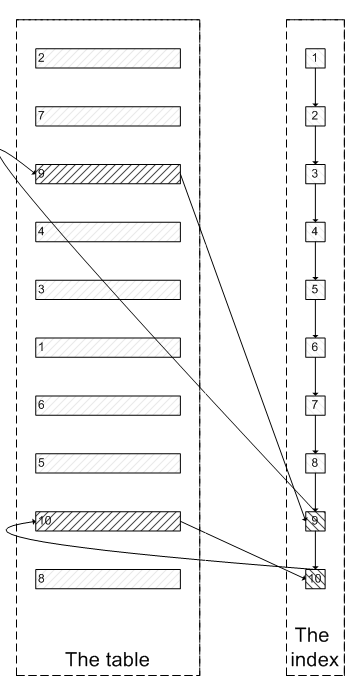

If we did something like LIMIT 8, 2 we could just skip the first 8 values using the index and the return the remaining two:

We see that this is a much more efficient algorithm that will save us lots of row lookups.

This is called late row lookup: the engine should look a row up only if there is no way to avoid it. If there is a chance that a row will be filtered out by using the indexed fields only, it should be done before the rows are looked up in the actual MySQL table. There is no point in fetching the records out of the table just to discard them.

However, MySQL always does early row lookup: it searches for a row prior to checking values in the index, even if the optimizer decided to use the index.
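The difference between the two strategies can be sketched with a toy model in Python. This is only an illustration under stated assumptions, not how any storage engine is implemented: the "index" is a sorted list of (key, pointer) pairs, the "table" is a dict, every table access counts as one row lookup, and all names (`make_data`, `early_lookup`, `late_lookup`) are hypothetical.

```python
# Toy model of early vs. late row lookup. All names are hypothetical.

def make_data(n):
    # The "table" maps a row pointer to a full row.
    table = {ptr: {"id": ptr, "stuffing": "*" * 20} for ptr in range(1, n + 1)}
    # The "index" holds (key, row pointer) pairs, already sorted by key.
    index = [(ptr, ptr) for ptr in range(1, n + 1)]
    return index, table

def early_lookup(index, table, offset, count):
    """Fetch the row for every index record, then discard the first `offset`."""
    lookups = 0
    rows = []
    for _key, ptr in index[:offset + count]:
        rows.append(table[ptr])  # one row lookup per index record
        lookups += 1
    return rows[offset:], lookups

def late_lookup(index, table, offset, count):
    """Skip `offset` records using the index alone, then fetch only the rest."""
    lookups = 0
    rows = []
    for _key, ptr in index[offset:offset + count]:
        rows.append(table[ptr])  # row lookups only for the rows returned
        lookups += 1
    return rows, lookups

index, table = make_data(2000)
early_rows, early_cost = early_lookup(index, table, 1500, 10)
late_rows, late_cost = late_lookup(index, table, 1500, 10)
print(early_cost, late_cost)  # 1510 10
```

Both functions return the same ten rows; only the number of table accesses differs.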

Let's create a sample table and try to reproduce this behavior:

CREATE TABLE filler (

id INT NOT NULL PRIMARY KEY AUTO_INCREMENT

) ENGINE=Memory;

CREATE TABLE t_limit (

id INT NOT NULL,

value VARCHAR(20) NOT NULL,

stuffing VARCHAR(200) NOT NULL,

CONSTRAINT pk_limit_id PRIMARY KEY (id)

) ENGINE=MyISAM DEFAULT CHARSET=utf8;

DELIMITER $$

CREATE PROCEDURE prc_filler(cnt INT)

BEGIN

DECLARE _cnt INT;

SET _cnt = 1;

WHILE _cnt <= cnt DO

INSERT

INTO filler

SELECT _cnt;

SET _cnt = _cnt + 1;

END WHILE;

END

$$

DELIMITER ;

START TRANSACTION;

CALL prc_filler(200000);

COMMIT;

INSERT

INTO t_limit

SELECT id, CONCAT('Value ', id), RPAD('', 200, '*')

FROM filler;

This MyISAM table contains 200,000 records and has a PRIMARY KEY index on id. Each record is filled with 200 bytes of stuffing data.

Here's the query to select values from 150,001 to 150,010:

SELECT id, value, LENGTH(stuffing) AS len

FROM t_limit

ORDER BY

id

LIMIT 150000, 10

This query runs for almost 6 seconds which is way too long.

It, however, uses a filesort which the optimizer considered more efficient than using the index. This would make sense if not for the stuffing field which is too long to be sorted efficiently. In this very case traversing the index would be faster.

Let's try to force the index:

SELECT id, value, LENGTH(stuffing) AS len

FROM t_limit FORCE INDEX (PRIMARY)

ORDER BY

id

LIMIT 150000, 10

Now it is only 1.23 seconds but still too long due to the early row lookups.

We, however, can trick MySQL into using late row lookups.

We will only select the id in the subquery with an ORDER BY and LIMIT and then join the original table back on id.

This will make each individual row lookup less efficient, since each join will require looking up the index value again. However, this is not a big deal, and the total number of lookups will be greatly reduced, so an overall performance increase is expected:

SELECT l.id, value, LENGTH(stuffing) AS len

FROM (

SELECT id

FROM t_limit

ORDER BY

id

LIMIT 150000, 10

) o

JOIN t_limit l

ON l.id = o.id

ORDER BY

l.id

This is only 75 ms, or 16 times as fast as the previous query.

Note that we put an additional ORDER BY to the end of the query, since the order is not guaranteed to be preserved after the join. This resulted in an extra filesort in the plan. However, the actual plan used outputs the values already sorted and this filesort, therefore, will require only a single pass over 10 values which is instant.
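As a quick sanity check that the rewritten query returns the same rows as the plain one, here is a small sketch using Python's built-in sqlite3 module. SQLite is only a stand-in here (an assumption made so the example is self-contained): it says nothing about MySQL's timings or plans, and the table is scaled down to 2,000 rows with a LIMIT 1500, 10.

```python
import sqlite3

# In-memory SQLite database as a stand-in for the MySQL table in the article.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE t_limit ("
    " id INTEGER NOT NULL PRIMARY KEY,"
    " value VARCHAR(20) NOT NULL,"
    " stuffing VARCHAR(200) NOT NULL)"
)
conn.executemany(
    "INSERT INTO t_limit VALUES (?, ?, ?)",
    [(i, f"Value {i}", "*" * 200) for i in range(1, 2001)],
)

# The plain query: ORDER BY / LIMIT directly on the table.
plain = conn.execute(
    "SELECT id, value, LENGTH(stuffing) AS len"
    " FROM t_limit ORDER BY id LIMIT 1500, 10"
).fetchall()

# The rewritten query: pick the ids first, then join the table back.
late = conn.execute(
    "SELECT l.id, value, LENGTH(stuffing) AS len"
    " FROM (SELECT id FROM t_limit ORDER BY id LIMIT 1500, 10) o"
    " JOIN t_limit l ON l.id = o.id"
    " ORDER BY l.id"
).fetchall()

print(plain == late)  # True
print(late[0])        # (1501, 'Value 1501', 200)
```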

IN list vs. range condition: MySQL

Answering questions asked on the site.

Princess asks:

Hello, I've got a problem with SQL.

I have a table which contains the production details for the factories. They are not factories and items of course but I cannot disclose the project and need to obfuscate so let's pretend they are :)

I need to select the items for which the first 5 factories have low production rate.

I tried to do the query like this:

SELECT ProductionItem FROM FactoryProductions WHERE 5 >= FactoryID AND 100 >= ProductionAmount

which returns correct results but is slow.

I have an index on (FactoryID, ProductionAmount).

There are 13 Factories and 2,300,000 Items

This is in MySQL

This is a nice illustration of how index range evaluation works in MySQL and how to optimize it.

We will assume that the ProductionAmount is usually much higher than 100.

Now, let's create a sample table:

Read the rest of this entry »

Bitwise operations and indexes

From Stack Overflow:

Are the following queries efficient in MySQL:

SELECT * FROM table WHERE field & number = number -- to find values with superset of number's bits

SELECT * FROM table WHERE field | number = number -- to find values with subset of number's bits

if an index for the field has been created?

If not, is there a way to make it run faster?
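To make the two conditions concrete, here is a small Python sketch of what each one selects. The helper names and the sample values are made up for illustration.

```python
def has_superset_of_bits(field, number):
    # field & number = number: every bit set in number is also set in field
    return (field & number) == number

def has_subset_of_bits(field, number):
    # field | number = number: field sets no bit outside those of number
    return (field | number) == number

number = 0b0110
fields = [0b0000, 0b0010, 0b0110, 0b0111, 0b1110, 0b1001]

supersets = [f for f in fields if has_superset_of_bits(f, number)]
subsets = [f for f in fields if has_subset_of_bits(f, number)]

print(supersets)  # [6, 7, 14]
print(subsets)    # [0, 2, 6]
```

Note that the matching values do not form one contiguous interval of the key (8 through 13 lie between 7 and 14 and do not match), so a condition like this does not translate into a single index range.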

An index can be used for the following things:

- To limit the number of records scanned

- To lower the number of row lookups

When doing a full table scan, every record should be fetched and examined. If the table contains, say, 1,000,000 records, and each record is 100 bytes long, then 100 MB worth of data should be processed. These data are usually scanned sequentially.

An index row, on the other hand, contains the key value, the row pointer and some additional information (pointers to the parents and children). On a MyISAM table, each row of the index on an INT column occupies 10 bytes (4 (sizeof(INT)) + 6 (default MyISAM pointer size)) plus some overhead (block headers, leaf pointers etc).

Even if we cannot build a range on the index and need to look over all index values, 10 MB is far less than 100 MB.

However, scanning the index has two drawbacks:

- Traversing the B-Tree is slower than a full table scan, since the former is not sequential

- When the condition we search for matches the index key, we should follow the pointer to fetch the unindexed values from the table row. This also takes some time.

Both these things can be optimized:

- Oracle has the INDEX FAST FULL SCAN access method. It scans the index rows in their physical order, not logical. The key order is not maintained in such a scan, but we do not always need it anyway.

This makes the index scanning sequential (and taking constant time).

- PostgreSQL has the bitmap access method. PostgreSQL cannot disregard the index order as Oracle does so it has to traverse the tree anyway. But instead of following the pointer to fetch the values it fills a special bitmap with a bit set for each matching row.

The order of bits corresponds to the physical order of rows.

When it is done setting bits, it just reads the bitmap and fetches the rows with the bits set. Since the bits are ordered, the rows fetched are ordered too, so this scan is also sequential. Scanning the bitmap takes constant time (which is usually negligible since bitmaps are very small); the pure time for fetching the rows depends on the number of bits set but is no longer than a full scan (since the scan is sequential).
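The bitmap idea described above can be sketched in a few lines of Python. This is a simplified model, not PostgreSQL's actual implementation: the table is a list of rows in physical order, the index is a list of (key, position) pairs in key order, and `bitmap_scan` is a hypothetical name.

```python
def bitmap_scan(index, table, predicate):
    """index: (key, position) pairs in key order; table: rows in physical order."""
    bitmap = [False] * len(table)
    for key, position in index:       # the index is traversed in key order
        if predicate(key):
            bitmap[position] = True   # remember the row, do not fetch it yet
    # One pass over the bitmap in physical order; only marked rows are fetched.
    return [table[pos] for pos, bit in enumerate(bitmap) if bit]

table = [{"field": v} for v in [14, 3, 6, 9, 7, 0]]
index = sorted((row["field"], pos) for pos, row in enumerate(table))

rows = bitmap_scan(index, table, lambda key: (key & 6) == 6)
print([row["field"] for row in rows])  # [14, 6, 7]
```

The matching rows come back in their physical order (14, 6, 7), not in key order (6, 7, 14), which is what makes the table access sequential.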

MySQL, however, does neither of these optimizations.

Adjacency list vs. nested sets: MySQL

Continuing the series:

What is better to store hierarchical data: nested sets model or adjacency list (parent-child) model?

For detailed explanations of the terms, see the first article in the series:

This is the last article of the series which covers MySQL.

MySQL differs from the other systems, since it is the only system of the big four that does not support recursion natively. It has neither recursive CTEs nor a CONNECT BY clause, not even the rowset-returning functions that help to emulate recursion in PostgreSQL 8.3.

MySQL supports a thing that all other systems either lack or implement inefficiently: session variables. They can be set in a SELECT clause and can be used to keep some kind of a state between the rows as they are processed and returned in a rowset.

This of course is against the whole spirit of SQL, since SQL implies operations on whole sets and session variables operate on rows and are totally dependent on the order they are returned or processed. But if used properly, this behavior can be exploited to emulate some things that MySQL lacks: analytic functions, efficient random row sampling etc.

Hierarchical functions are among the things that need to be emulated in MySQL using session variables to keep the function state.

Here's the old article in my blog that shows how to do this:

On the other hand, MySQL implements one more thing that is useful for nested sets model: SPATIAL indexes.

Read the rest of this entry »

NOT IN vs. NOT EXISTS vs. LEFT JOIN / IS NULL: MySQL

Which method is best to select values present in one table but missing in another one?

This:

SELECT l.* FROM t_left l LEFT JOIN t_right r ON r.value = l.value WHERE r.value IS NULL

this:

SELECT l.* FROM t_left l WHERE l.value NOT IN ( SELECT value FROM t_right r )

or this:

SELECT l.* FROM t_left l WHERE NOT EXISTS ( SELECT NULL FROM t_right r WHERE r.value = l.value )
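For readers who want to check that the three forms select the same rows, here is a small sketch using Python's built-in sqlite3 module as a stand-in (an assumption made for self-containment; it shows nothing about MySQL performance). The sample data is made up, and both value columns are declared NOT NULL; with NULLs in t_right, NOT IN would behave differently from the other two.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE t_left (id INTEGER PRIMARY KEY, value INTEGER NOT NULL);
    CREATE TABLE t_right (id INTEGER PRIMARY KEY, value INTEGER NOT NULL);
    INSERT INTO t_left (value) VALUES (1), (2), (3), (4), (5);
    INSERT INTO t_right (value) VALUES (2), (4), (4), (6);
""")

queries = {
    "LEFT JOIN / IS NULL":
        "SELECT l.* FROM t_left l LEFT JOIN t_right r"
        " ON r.value = l.value WHERE r.value IS NULL",
    "NOT IN":
        "SELECT l.* FROM t_left l WHERE l.value NOT IN"
        " (SELECT value FROM t_right r)",
    "NOT EXISTS":
        "SELECT l.* FROM t_left l WHERE NOT EXISTS"
        " (SELECT NULL FROM t_right r WHERE r.value = l.value)",
}

# Run each query and sort the rows so the results can be compared directly.
results = {name: sorted(conn.execute(sql).fetchall())
           for name, sql in queries.items()}

for name, rows in results.items():
    print(name, rows)  # each prints [(1, 1), (3, 3), (5, 5)]
```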

Finally, it's MySQL time.

As always, we will create the sample tables:

MySQL: emulating ROW_NUMBER with multiple ORDER BY conditions


Answering questions asked on the site.

Magerio asks:

Hello, thanks for your great post about advanced row sampling, it helped me a lot.

I have a question.

I am using your SQL query to show a portfolio of products and these products have a certain integer quality level (the lower, the better).

What if I want to show the best 3 products of each category?

Just a quick reminder: the article mentioned describes a query to emulate ROW_NUMBER() in MySQL. Since MySQL does not support analytic (window) functions natively, we had to do some kind of a trick to mimic their behaviour.

The original article described a query to obtain 15 first id's for each grouper. The idea behind this query was quite simple:

- Take a list of groupers using DISTINCT

- For each grouper, find the 15th id using LIMIT in a correlated subquery. We have now a set of (grouper, id15) pairs: for any given grouper we have the id of the 15th row (ordered by id).

- Join this resultset with the main table so that for each grouper, the ids less than or equal to the 15th id are selected. We then will have the 15 lowest ids for each grouper, which is exactly what we were after.
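The three steps can be sketched outside of SQL as follows. This is a plain Python model of the idea, not the original query: `first_n_per_grouper` is a hypothetical name, n is 3 instead of 15 to keep the sample small, and falling back to the last id for groupers with fewer than n rows is an assumption of this sketch.

```python
from collections import defaultdict

def first_n_per_grouper(rows, n):
    """rows: list of (grouper, id) pairs with unique ids."""
    # Step 1: collect the distinct groupers (with their ids).
    ids_by_grouper = defaultdict(list)
    for grouper, row_id in rows:
        ids_by_grouper[grouper].append(row_id)

    # Step 2: for each grouper, find the id of the n-th row ordered by id
    # (assumption: use the last id when the grouper has fewer than n rows).
    nth_id = {}
    for grouper, ids in ids_by_grouper.items():
        ordered = sorted(ids)
        nth_id[grouper] = ordered[min(n, len(ordered)) - 1]

    # Step 3: join back, keeping ids less than or equal to the n-th id.
    return sorted((g, i) for g, i in rows if i <= nth_id[g])

rows = [(1, 10), (1, 11), (1, 12), (1, 13), (2, 20), (2, 21), (3, 30)]
print(first_n_per_grouper(rows, 3))
# [(1, 10), (1, 11), (1, 12), (2, 20), (2, 21), (3, 30)]
```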

This emulated ROW_NUMBER() OVER (PARTITION BY grouper ORDER BY id): a function with a single PARTITION BY expression and a single ORDER BY expression.

But in your case there are multiple ORDER BY expressions. It may not be so obvious (since the question mentions only one ordering criterion, namely, quality). However, the solution above relied on the fact that the field in ORDER BY was unique, so we know that there are exactly 15 rows with id less than or equal to the id returned with LIMIT 15. Since this is most probably not the case with quality, we should include id into the ORDER BY clause so that it makes the ordering condition unique.

A subquery in MySQL, however, can only return a single value.

To work around this, we should use two subqueries that will return us a single column in an ORDER BY condition.

Let's create a sample table:

Read the rest of this entry »

MySQL: matching whole sets


From Stack Overflow:

I have a database that stores products available on the market and products still in development in two separate tables (market_product and dev_product).

A third table (substance) contains all substances a product can be made of.

Other two tables (marked_product_comp and dev_product_comp) maintain product compositions.

I want to select products still in development that are made of the same ingredients of marketed products.

This means that we need to return every dev_product that has at least one market_product with complete substance match.

In the following example:

| DevProductID | Substance |
|---|---|
| 1 | Apple |
| 1 | Banana |
| 2 | Apple |
| 2 | Banana |
| 2 | Cabbage |

| MarketProductID | Substance |
|---|---|
| 1 | Apple |
| 2 | Apple |
| 2 | Banana |
| 3 | Apple |
| 3 | Banana |
| 3 | Cabbage |
| 3 | Daikon |

| 3 | Daikon |

we should return development product 1, since it's made of apples and bananas, and there is at least one product already on the market (namely, market product 2) that is made of exactly the same things and nothing else.

We should not return development product 2, because there is no complete match with market product 3, since daikons are used to make the latter but not the former.
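The matching rule can be checked against the example data with a short Python sketch. It only models the requirement (equal substance sets), not the MySQL solution; the function names are made up for illustration.

```python
from collections import defaultdict

# (product id, substance) pairs from the example tables above.
dev = [(1, "Apple"), (1, "Banana"),
       (2, "Apple"), (2, "Banana"), (2, "Cabbage")]
market = [(1, "Apple"),
          (2, "Apple"), (2, "Banana"),
          (3, "Apple"), (3, "Banana"), (3, "Cabbage"), (3, "Daikon")]

def compositions(pairs):
    """Group the substances by product id into one frozenset per product."""
    sets = defaultdict(set)
    for product_id, substance in pairs:
        sets[product_id].add(substance)
    return {pid: frozenset(s) for pid, s in sets.items()}

def matching_dev_products(dev_pairs, market_pairs):
    """Dev products whose substance set equals that of some market product."""
    market_sets = set(compositions(market_pairs).values())
    return sorted(pid for pid, s in compositions(dev_pairs).items()
                  if s in market_sets)

print(matching_dev_products(dev, market))  # [1]
```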

In one of the previous articles I wrote about a similar task in SQL Server:

However, this used FULL OUTER JOIN which MySQL lacks.

Let's see how to implement this task in MySQL:

MySQL: difference between sets

From Stack Overflow:

I have a table that holds data about items that existed at a certain time (regular snapshots taken).

Simple example:

| Timestamp | ID |
|---|---|
| 1 | A |
| 1 | B |
| 2 | A |
| 2 | B |
| 2 | C |
| 3 | A |
| 3 | D |
| 4 | D |
| 4 | E |

In this case, item C gets created sometime between snapshot 1 and 2, sometime between snapshot 2 and 3 B and C disappear and D gets created, etc.

The table is reasonably large (millions of records) and for each timestamp there are about 50 records.

What's the most efficient way of selecting the item ids for items that disappear between two consecutive timestamps?

So for the above example I would like to get the following:

| Previous snapshot | Current snapshot | Removed |
|---|---|---|
| 1 | 2 | NULL |
| 2 | 3 | B, C |
| 3 | 4 | A |

If it doesn't make the query inefficient, can it be extended to automatically use the latest (i. e. MAX) timestamp and the previous one?

We basically need to do the following things here:

- Split the table into sets grouped by timestamp

- Compare each set with the one of previous timestamp

- Find the values missing in the current set and concatenate them
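These three steps can be modelled in Python on the example data from the question. This is only a sketch of the logic, not the MySQL query; `removed_between_snapshots` is a hypothetical name and None stands in for NULL.

```python
from collections import defaultdict

# (timestamp, item id) pairs from the example in the question.
snapshots = [(1, "A"), (1, "B"), (2, "A"), (2, "B"), (2, "C"),
             (3, "A"), (3, "D"), (4, "D"), (4, "E")]

def removed_between_snapshots(rows):
    # Step 1: split the table into sets grouped by timestamp.
    items = defaultdict(set)
    for ts, item in rows:
        items[ts].add(item)

    timestamps = sorted(items)
    result = []
    # Step 2: compare each set with the set of the previous timestamp.
    for prev, cur in zip(timestamps, timestamps[1:]):
        # Step 3: find the values missing in the current set and concatenate them.
        missing = sorted(items[prev] - items[cur])
        result.append((prev, cur, ", ".join(missing) if missing else None))
    return result

for row in removed_between_snapshots(snapshots):
    print(row)
# (1, 2, None)
# (2, 3, 'B, C')
# (3, 4, 'A')
```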

This can be done using only the standard ANSI SQL operators. However, this will be inefficient in MySQL.

Let's create a sample table and see how to work around this:

Passing parameters in MySQL: IN list vs. temporary table

When you need to pass a list of parameters into a MySQL query, there are at least two methods to do this:

- Issue a dynamic query which would fill an IN list with constant values

- Fill a temporary table with these values then use it in a JOIN

The second method is considered better for many reasons (you can easily reuse the values, it's more injection-proof etc.).

Leaving all these issues aside, let's compare these methods performance-wise.

We will create a table of 50,000 records, pass a list of 500 parameters (using both methods) and see which is faster:

Hierarchical queries in MySQL: VARCHAR keys


Answering questions asked on the site.

Basit asks:

I'm trying to implement a hierarchical query in MySQL using solutions you posted in your article.

How can I make the tree menu if the id is unique VARCHAR, like the ones used on youtube?

We will need to fix the query and the function just a little:

- The function should return a VARCHAR

- The @start_with and other variables should be initialized with VARCHARs

- The initial condition for _id should be a minimal VARCHAR possible, i. e. an empty string

Let's create a sample table and check how it works:

CREATE TABLE filler (

id INT NOT NULL PRIMARY KEY AUTO_INCREMENT

) ENGINE=Memory;

CREATE TABLE t_key (

id INT NOT NULL PRIMARY KEY,

string_id VARCHAR(32) NOT NULL

) ENGINE=Memory;

CREATE TABLE t_hierarchy (

id VARCHAR(32) NOT NULL PRIMARY KEY,

parent VARCHAR(32) NOT NULL,

data VARCHAR(50) NOT NULL,

KEY ix_hierarchy_parent (parent)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

DELIMITER $$

CREATE PROCEDURE prc_filler(cnt INT)

BEGIN

DECLARE _cnt INT;

SET _cnt = 1;

WHILE _cnt <= cnt DO

INSERT

INTO filler

SELECT _cnt;

SET _cnt = _cnt + 1;

END WHILE;

END

$$

DELIMITER ;

START TRANSACTION;

CALL prc_filler(20);

COMMIT;

INSERT

INTO t_key

SELECT id,

(

SELECT GROUP_CONCAT(CHAR(65 + FLOOR(RAND(20090817) * 25)) SEPARATOR '')

FROM (

SELECT NULL

FROM filler

LIMIT 8

) q

)

FROM filler;

INSERT

INTO t_hierarchy

SELECT ki.string_id, COALESCE(kp.string_id, '_'), CONCAT('Row ', f.id)

FROM filler f

JOIN t_key ki

ON ki.id = f.id

LEFT JOIN

t_key kp

ON kp.id = f.id DIV 3;

Here's the function:

CREATE FUNCTION hierarchy_connect_by_parent_eq_prior_id(value VARCHAR(32))

RETURNS VARCHAR(32)

NOT DETERMINISTIC

READS SQL DATA

BEGIN

DECLARE _id VARCHAR(32);

DECLARE _parent VARCHAR(32);

DECLARE CONTINUE HANDLER FOR NOT FOUND SET @id = NULL;

SET _parent = @id;

SET _id = '';

IF @id IS NULL THEN

RETURN NULL;

END IF;

LOOP

SELECT MIN(id)

INTO @id

FROM t_hierarchy

WHERE parent = _parent

AND id > _id;

IF @id IS NOT NULL OR _parent = @start_with THEN

SET @level = @level + 1;

RETURN @id;

END IF;

SET @level := @level - 1;

SELECT id, parent

INTO _id, _parent

FROM t_hierarchy

WHERE id = _parent;

END LOOP;

END

$$

And here's the query:

SELECT CONCAT(REPEAT(' ', level - 1), CAST(hi.id AS CHAR)) AS treeitem, parent, level

FROM (

SELECT hierarchy_connect_by_parent_eq_prior_id(id) AS id, @level AS level

FROM (

SELECT @start_with := '_',

@id := @start_with,

@level := 0

) vars, t_hierarchy

WHERE @id IS NOT NULL

) ho

JOIN t_hierarchy hi

ON hi.id = ho.id

| treeitem | parent | level |
|---|---|---|
| FDBVDDGT | _ | 1 |
| ENCYPEWC | FDBVDDGT | 2 |
| AJXOXCQA | ENCYPEWC | 3 |
| CLYLKREW | ENCYPEWC | 3 |
| YFCUVVRX | ENCYPEWC | 3 |
| JVDDGUJJ | FDBVDDGT | 2 |
| ELQWNYGL | JVDDGUJJ | 3 |
| KTRBGCQA | JVDDGUJJ | 3 |
| SCGYDUQU | JVDDGUJJ | 3 |
| VEHXSYRL | FDBVDDGT | 2 |
| BIKCFVOH | VEHXSYRL | 3 |
| HAGFGSUW | VEHXSYRL | 3 |
| TCBYTARM | VEHXSYRL | 3 |
| GVPJESCI | _ | 1 |
| HCNHAEWW | GVPJESCI | 2 |
| KNIDSGBM | GVPJESCI | 2 |
| SJPBIMNF | GVPJESCI | 2 |
| JETHGIVJ | SJPBIMNF | 3 |
| OBPXSXJF | SJPBIMNF | 3 |
| VPOXBLBW | SJPBIMNF | 3 |

20 rows fetched in 0.0006s (0.0180s)

| id | select_type | table | type | possible_keys | key | key_len | ref | rows | filtered | Extra |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | PRIMARY | <derived2> | ALL | | | | | 20 | 100.00 | |
| 1 | PRIMARY | hi | eq_ref | PRIMARY | PRIMARY | 98 | ho.id | 1 | 100.00 | |
| 2 | DERIVED | <derived3> | system | | | | | 1 | 100.00 | |
| 2 | DERIVED | t_hierarchy | index | | PRIMARY | 98 | | 20 | 100.00 | Using where; Using index |
| 3 | DERIVED | | | | | | | | | No tables used |

Works just fine.
