Archive for the ‘MySQL’ Category

Index search time depends on the value being searched

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Daniel asks:

I have a table which stores track titles in a

VARCHAR(200)field. The field is indexed, but searching for titles beginning with a letter Z is noticeably slower than for those beginning with A, and the closer the initial letter is to Z, the slower is the query.My understanding is that a full table scan occurs, but

EXPLAINshows that the index is used. Besides, the table is quite large but the query is still reasonably fast.Could you please explain this behavior?

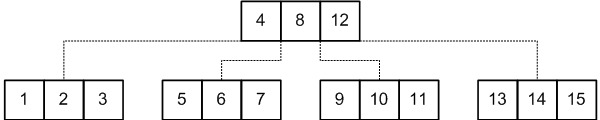

MySQL stores its indexes in B-Tree data structures.

The Wikipedia link above explains the structure quite well so I won't repeat it. I'll rather draw a picture similar to the one in the article:

This picture is quite self-explanatory. The records are sorted in a tree order, so if you are searching for a certain value, say, 11, you, starting from the first page, should find the link to follow. To do this, you need to find the pair of values less than and greater than 11. In this case, you should follow the link which is between 8 and 12. Then you search for the next pair, etc, until you find your value or reach the end and make sure that your value is not there.

Following the links is quite simple, but how does the engine search for the values within one page?

This depends on how you declared the table.

MyISAM supports two algorithms for storing the index keys in a page: packed keys and unpacked keys.

Read the rest of this entry »

Join on overlapping date ranges

Comments enabled. I *really* need your comment

This post is inspired by a discussion with John Didion:

Is there any way to optimize the query for overlapping ranges in MySQL if both ranges are dynamic?

I have two tables, each with integer range columns (specified as

LineString), and I want to find rows that overlap.No matter what I try, the query planner never uses any indexes.

This question addresses a well-known problem of efficient searching for the intersecting intervals. The queries that deal with it require ability to search for the intervals (stored in two distinct columns) containing a constant scalar value.

Plain B-Tree indexes used by most databases do not speed up the queries like that. However, MySQL supports SPATIAL indexes that can index two-dimensional shapes and efficiently search for the shapes containing a given point.

With a little effort, time intervals can be converted into the geometrical objects, indexed with a SPATIAL index and searched for the given point in time (also presented as a gemetrical object). This is described in the article about overlapping ranges in MySQL.

The query described in that article, however, searches for the intervals overlapping a constant range, provided as a parameter to the query. Now, I will discuss how to adapt the query for a JOIN between two tables.

Let's create two sample tables, each containing a set of time ranges stored as geometrical objects, and find all records from both tables whose ranges overlap:

Read the rest of this entry »

Aggregates: subqueries vs. GROUP BY

From Stack Overflow:

I have a table

usersand there is a fieldinvited_by_idshowing user id of the person who invited this user.I need to make a MySQL query returning rows with all the fields from users plus a

invites_countfield showing how many people were invited by each user.

The task seems very simple (and it is in fact), but there are at least two approaches to do it. It this article, I will discuss the benefits and the drawbacks of each approach.

GROUP BY

The first approach is using GROUP BY:

SELECT u.*, COUNT(ui.id)

FROM users u

LEFT JOIN

users ui

ON ui.invited_by = u.id

GROUP BY

u.id

This is a GROUP BY with a self join, very simple. There are only two little things I'd like to pay some attention to.

First, we need to select all users, even those who invited no other members. An inner join would leave them out, so we use a LEFT JOIN, and use COUNT(ui.id) instead of COUNT(*), because, due to the very nature of aggregation, COUNT(*) returns at least 1 in a query with GROUP BY, and COUNT(ui.id) skips NULLs (which can only result from a LEFT JOIN miss).

Second, we group by u.id but use u.* in the SELECT clause. Every other engine would fail in this case, but MySQL allows selecting fields that are neighter grouped by nor aggregated. These fields will return an arbitrary value from any of the aggregated records (in practice, that is the record first read in its group).

This behavior is often abused, since MySQL does not guarantee what exactly it will return, but it's perfectly valid for the queries like ours. We don't need to clutter the GROUP BY clause with all fields from users if we have already grouped by the PRIMARY KEY which is already unique. All other values from users are uniquely defined by the PRIMARY KEY so there is no matter which arbitrary record will the query use to return ungrouped values: they are all same within the group.

Subquery

This solution involves correlated subqueries:

SELECT u.*,

(

SELECT COUNT(*)

FROM users ui

WHERE ui.invited_by = u.id

)

FROM users u

Here, we calculate the COUNT(*) in a correlated subquery. This query returns exactly same records as the previous one.

Comparison

Let's create two sample tables (a MyISAM one and an InnoDB one) and see which solution is more efficient for different scenarios:

Read the rest of this entry »

Latest visitors

Comments enabled. I *really* need your comment

From Stack Overflow:

Say you want to display the latest visitors on a users profile page.

How would you structure this?

Perhaps a table called

uservisitors:

uservisitors userid visitorid time And how would you select this with MySQL without any duplicates?

What I mean is if user 1 visits user 2's profile, then 5 minutes later visits it again, I don't want to show both entries: only the latest.

There are two approaches to this.

First one would be just aggregating the visits, finding the max time and ordering on it. Something like this:

SELECT visitorid, MAX(time) AS lastvisit FROM uservisitors WHERE userid = 1 GROUP BY userid, visitorid ORDER BY lastvisit DESC LIMIT 5

However, there is a little problem with this solution.

Despite the fact that MySQL (with proper indexing) uses INDEX FOR GROUP-BY optimization for this query, it will still have to sort on MAX(time) to find 5 latest records.

This will require sorting the whole resultset which will be huge if the service is heavily loaded.

Let's test it on a sample table:

Read the rest of this entry »

Linked lists in MySQL: multiple ordering

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Rick McIntosh asks:

I have two tables, one with a list of spreadsheets (

a) and the other with a list of the column headings that show in the spreadsheets (b).

a id parent 1 2 2 0

b id parent aid 1 1 1 2 0 1 3 2 1 4 6 2 5 4 2 6 0 2 I want to bring the columns back in first the order of the spreadsheets as defined by the

a.parent_idthen ordered asb.parent_id:

id parent aid aparent 6 0 2 0 4 6 2 0 5 4 2 0 2 0 1 2 3 2 1 2 1 1 1 2

This can be done using the same recursion technique as the one that was used to build a simple linked list.

MySQL does not support recursion directly, but it can be emulated using subquery calls in the SELECT clause of the query, using session variables to store the recursion state.

In this case we need to do the following:

- Generate a dummy recordset for recursion that would contain as many rows as the resulting recordset. This is best done by issuing a

JOINon theFOREIGN KEY, without any ordering. The values of the recordset will not be used in the actual query. - Initialize

@aand@bto be the first value ofaand a 0, accordingly. - In the loop, make a query that would return the next item of

bfor the current value of@a, or, should it fail, return the first item ofbfor the next@a. This is best done using aUNION ALLwith aLIMIT. - Adjust

@aso that is points to the correct item: just select the appropriate value fromb

Let's create a sample table:

Read the rest of this entry »

MySQL: Selecting records holding group-wise maximum (resolving ties)

Continuing the series on selecting records holding group-wise maximums.

The approach shown in the previous article is quite efficient, however, it can only be relied upon if the column being maximized is unique.

If the column is not unique (even along with the grouping column), the ties are possible: multiple records can satisfy the condition of holding a group-wise maximum

.

In this article, I'll show how to resolve the ties.

Let's recreate the table we used in the previous article:

Read the rest of this entry »

MySQL: Selecting records holding group-wise maximum (on a unique column)

Answering questions asked on the site.

Juan asks:

Regarding this question:

I would like to know what database is the most efficient when performing this type of query and if the solutions proposed are already the most efficient.

I am using MySQL but I would like to know if PostgreSQL, Oracle, Microsoft SQL Server or DB2 are much more efficient.

A nice question, this is a common problem for most developers.

For those too lazy to follow the link, the problem in one sentence:

How do I select the whole records, grouped on

grouperand holding a group-wise maximum (or minimum) on other column?

I've already covered this in passing in various previous articles in my blog, and now I'll try to make a little summary.

According to the SQL standards, every expression used in the SELECT list of a query that deals with GROUP BY should be either grouped by (that is, used in the GROUP BY) or aggregated.

The reason behind this is quite logical: a grouping query takes the records that have something in common and shrinks them, making a single record out of the whole set constituting the group.

The grouping expressions, of course, share their values across all the records constituting the group (since they are what that makes the group a group), and hence can be used as is.

Other expressions can vary and therefore some algorithm should be applied for shrinking all existing values into a single value. This is what aggregating function does.

The problem is that the the aggregating functions are not interdependent: you cannot use the result of one function as an input to another one. Hence, you cannot select a record in an aggregate query: you can only select a tuple of aggregated values. It's possible that each of these aggregated values in fact can never even found among the values of the original records. Say, with all values of column being strictly positive, you can never find a record holding a SUM(column): it will be greater than any of the values of column.

However, with MIN or MAX, it is guaranteed that at least one record holds the non-NULL value returned by these aggregate functions. The problem is to return the whole record without aggregating the other columns.

As SQL evolved, different solutions had been proposed to solve this problem, and I'll try to cover these solutions in this series of articles.

First, I'll cover MySQL (as per request)

MySQL's dialect of SQL is much more poor than those used by the other systems. That's why most of the solutions used by the other systems, like analytic functions, DISTINCT ON etc. cannot be used in MySQL.

However, MySQL still provides some ways to make the queries that solve this task more efficient.

Let's create a sample table:

Read the rest of this entry »

Selecting most relevant row

Comments enabled. I *really* need your comment

From Stack Overflow:

I have whole bunch of contact data that may or may not contain all info, like this:

Name Address1 Address2 Test User Address1 Address2 test@example.com NULL NULL NULL test@example.com For each email address, I would love to extract the most relevant record, that is the row with the contact information rather than the row with empty fields

To do this we need a sorting criterion: how to tell that a record is considered more relevant

than another record. We can use the number of the fields filled as a criterion.

Let's create a sample table:

Inverting date ranges: MySQL

Answering questions asked on the site.

Maxym asks:

Inverting date ranges is an interesting article.

I had the same problem, and I solved it almost the same way in PostgreSQL. Your solution is great! The problem is to implement it on MySQL :) And of course performance there.

Do you have any idea how to transform this query for MySQL?

The query in question regards inverting contiguous non-overlapping date ranges: transforming them so the the gaps become the ranges and the ranges become the gaps.

The following set:

| StartDate | EndDate |

|---|---|

| 2009-11-12 | 2009-11-13 |

| 2009-11-15 | 2009-11-19 |

should become this:

| StartDate | EndDate |

|---|---|

NULL |

2009-11-12 |

| 2009-11-13 | 2009-11-15 |

| 2009-11-19 | NULL |

The link above shows how to make this query in SQL Server and can be applied to Oracle and PostgreSQL 8.4 as well.

However, the query in question relies on FULL OUTER JOIN and ROW_NUMBER. MySQL lacks both of these.

To implement this query in MySQL we need to use a certain workaround.

Let's create a sample table:

Read the rest of this entry »

MySQL: average of sums using indexes

Comments enabled. I *really* need your comment

From Stack Overflow:

My table looks like:

person_id car_id miles 1 1 100 1 2 200 2 3 1000 2 4 500 I need to total the miles for each person and then average those totals.

This is a good question to demonstrate how to improve MySQL queries using covering indexes.

The query should return a single value, which is SUM(miles) / COUNT(DISTINCT person_id). Since this query does not relate the fields to each other (only total sum and total count matter), it could be performed using only the indexes on miles and person_id. However, MySQL is not able to do this by default and it requires a little help from developer's side.

Let's create a sample table:

This table has 1,000,000 rows with 1,000 distinct person_id's. There are indexes on miles and person_id.

A plain query would look like this:

SELECT SUM(miles) / COUNT(DISTINCT person_id) AS total FROM persons

| total | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 4998778.6000 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 1 row fetched in 0.0001s (2.1468s) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| id | select_type | table | type | possible_keys | key | key_len | ref | rows | filtered | Extra |

|---|---|---|---|---|---|---|---|---|---|---|

| 1 | SIMPLE | persons | ALL | 1000066 | 100.00 |

select (sum(`20091110_indexes`.`persons`.`miles`) / count(distinct `20091110_indexes`.`persons`.`person_id`)) AS `total` from `20091110_indexes`.`persons`

The query takes more than 2 seconds.

If we look into the query plan we will see that a full table scan is used. The query needs both the values of person_id and miles and no index contains both values. MySQL browses all table records which are quite large.

But despite the fact that the query needs both values, it does not need to correspond the values. It may calculate the SUM first (using an index on miles), and COUNT(DISTINCT person_id) in another query. Since index records are much less in size that the table records, the separate aggregates on them will be calculated much faster.

And we can improve the query even more by replacing COUNT(DISTINCT person_id) with a COUNT over DISTINCT person_id in a subquery. The latter query will use INDEX FOR GROUP BY and therefore be almost instant.

Here's how the new improved query would look like:

SELECT SUM(miles) / ( SELECT COUNT(*) FROM ( SELECT DISTINCT person_id FROM persons ) q ) AS total FROM persons

| total | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 4998778.6000 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 1 row fetched in 0.0001s (0.7344s) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| id | select_type | table | type | possible_keys | key | key_len | ref | rows | filtered | Extra |

|---|---|---|---|---|---|---|---|---|---|---|

| 1 | PRIMARY | persons | index | ix_persons_miles | 4 | 1000066 | 100.00 | Using index | ||

| 2 | SUBQUERY | Select tables optimized away | ||||||||

| 3 | DERIVED | persons | range | ix_persons_person | 4 | 2021 | 100.00 | Using index for group-by |

select (sum(`20091110_indexes`.`persons`.`miles`) / (select count(0) AS `COUNT(*)` from (select distinct `20091110_indexes`.`persons`.`person_id` AS `person_id` from `20091110_indexes`.`persons`) `q`)) AS `total` from `20091110_indexes`.`persons`

This query uses separate indexes to calculate the aggregate values, and by using INDEX FOR GROUP-BY, COUNT(DISTINCT person_id) is calculated almost instantly.

Overall query performance is 750 ms, or thrice as fast as the original query.

Subscribe in a reader

Subscribe in a reader