Questions

I had tons of work the last month and got a whole bunch of questions in the queue which I haven't answered yet.

Pawel, Mark, Kate, Felix, Andrew, Ludwig, Santos, Jeremy, John, another John, Anjan and Dave — I do remember about you, guys!

Will try to resume the normal blogging schedule the next week.

Stay subscribed!

Indexing for ORDER BY / LIMIT

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Frode Underhill asks:

I have some applications that are logging to a MySQL database table.

The table is pretty standard on the form:

time

BIGINT(20)source

TINYINT(4)severity

ENUMtext

VARCHAR(255), where

sourceidentifies the system that generated the log entry.There are very many entries in the table (>100 million), of which 99.9999% are debug or info messages.

I'm making an interface for browsing this log, which means I'll be doing queries like

SELECT * FROM log WHERE source = 2 AND severity IN (1,2) AND time > 12345 ORDER BY time ASC LIMIT 30, if I want to find debug or info log entries from a certain point in time, or

SELECT * FROM log WHERE source = 2 AND severity IN (1,2) AND time < 12345 ORDER BY time DESC LIMIT 30for finding entries right before a certain time.

How would one go about indexing & querying such a table?

I thought I had it figured out (I pretty much just tried every different combination of columns in an index), but there's always some set of parameters that results in a really slow query.

The problem is that you cannot use a single index both for filtering and ordering if you have a ranged condition (severity IN (1, 2) in this case).

Recently I wrote an article with a proposal to improve SQL optimizer to handle these conditions. If a range has low cardinality (this is, there are few values that con possibly satisfy the range), then the query could be improved by rewriting the range as a series of individual queries, each one using one of the values constituting the range in an equijoin:

No optimizers can handle this condition automatically yet, so we'll need to emulate it.

Since the severity field is defined as an enum with only 5 values possible, any range condition on this field can be satisfied by no more than 5 distinct values, thus making this table ideal for rewriting the query.

Let's create a sample table:

Read the rest of this entry »

GROUP_CONCAT in SQL Server

I'm finally back from my vacation. Tunisia's great: dates, Carthage, sea and stuff.

Now, to the questions.

Mahen asks:

Create a table called

Group:

Group id prodname 1 X 1 Y 1 Z 2 A 2 B 2 C The resultset should look like this:

id prodname 1 X,Y,Z 2 A,B,C Can you please help me to solve the above problem using a recursive CTE?

This is out good old friend, GROUP_CONCAT. It's an aggregate function that returns all strings within a group, concatenated. It's somewhat different from the other aggregate functions, because, first, dealing with the concatenated string can be quite a tedious task for the groups with lots of records (large strings tend to overflow), and, second, the result depends on the order of the arguments (which is normally not the case for the aggregate functions). It's not a part of a standard SQL and as for now is implemented only by MySQL with some extra vendor-specific keywords (like ORDER BY within the argument list).

This functionality, however, is often asked for and I have written some articles about implementing this in PostgreSQL and Oracle.

Now, let's see how to do it in SQL Server.

Usually, SQL Server's FOR XML clause is exploited to concatenate the strings. To do this, we obtain a list of group identifiers and for each group, retrieve all it's product names with a subquery appended with FOR XML PATH(''). This makes a single XML column out of the recordset:

Read the rest of this entry »

LEFT JOIN / IS NULL vs. NOT IN vs. NOT EXISTS: nullable columns

In one of the previous articles I discussed performance of the three methods to implement an anti-join in MySQL.

Just a quick reminder: an anti-join is an operation that returns all records from one table which share a value of a certain column with no records from another table.

In SQL, there are at least three methods to implement it:

LEFT JOIN / IS NULL

SELECT o.* FROM outer o LEFT JOIN inner i ON i.value = o.value WHERE i.value IS NULL

NOT IN

SELECT o.* FROM outer o WHERE o.value NOT IN ( SELECT value FROM inner )

NOT EXISTS

SELECT o.* FROM outer o WHERE NOT EXISTS ( SELECT NULL FROM inner i WHERE i.value = o.value )

When inner.value is marked as NOT NULL, all these queries are semantically equivalent and with proper indexing have similarly optimized execution plans in MySQL.

Now, what if inner.value is not nullable and does contain some NULL values?

Let's create some sample tables:

Read the rest of this entry »

Things SQL needs: determining range cardinality

What is the problem with this query?

SELECT *

FROM orders

WHERE quantity <= 4

AND urgency <= 4

The problem is indexing strategy, of course. Which columns should we index?

If we index quantity, the optimizer will be able to use the index to filter on it. However, filtering on urgency will require scanning all records with quantity < 4 and applying the urgency filter to each record found.

Same with urgency. We can use range access on urgency using an index, but this will require filtering on quantity.

Why, create a composite index!

, some will say.

Unfortunately, that won't help much.

A composite B-Tree index maintains what is called a lexicographical order of the records. This means that an index on (quantity, urgency) will sort on quantity, and only if the quantities are equal, it will take the urgency into account.

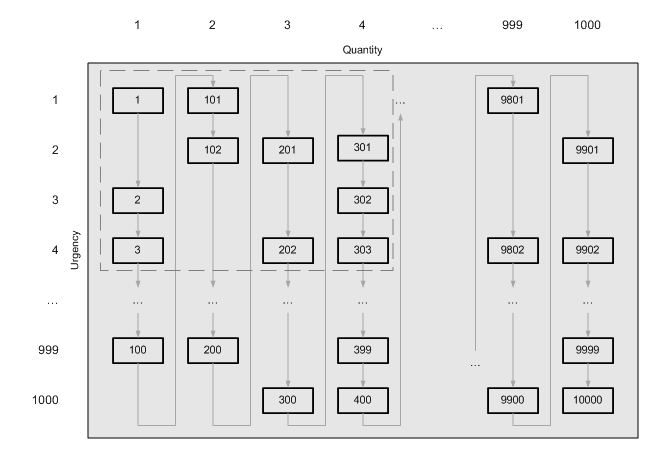

The picture below shows how would the records be ordered in such an index:

As we can see, with a single index range scan (i. e. just following the arrows) we cannot select only the records within the dashed rectangle. There is no single index range that could be used to filter on both columns.

Even if we changed the field order in the index, it would just change the direction of the arrows connecting the records:

Read the rest of this entry »

MAX and MIN on a composite index

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Ivo Radev asks:

I am trying to make a very simple query.

We have a log table which different machines write to. Given the machine list, I need to find the latest log timestamp.

Currently, the query looks like this:

SELECT MAX(log_time) FROM log_table WHERE log_machine IN ($machines), and I pass the comma-separated list of

$machinesfrom PHP.The weird thing is that the query is literally instant when there is only one machine (any) in the list but slow when there are multiple machines.

I'm considering doing it in separate queries and then process the results in PHP. However I'd like to know if there is a fast solution in MySQL.

Most probably, there is a composite index on (log_machine, log_time) which is being used for the query.

Usually, a query like this:

SELECT MAX(log_time) FROM log_table

on the indexed field log_time can be served with a single index seek on the index.

Indeed, the MAX(log_time), by definition, is the latest entry in the index order, and can be fetched merely by finding the trailing index entry. It's a matter of several page reads in the B-Tree, each one following the rightmost link to the lower-level page.

Similarly, this query:

SELECT MAX(log_time) FROM log_table WHERE log_machine = $my_machine

can be served with a single index seek too. However, the index should include log_machine as a leading column.

In this case, a set of records satisfying the WHERE clause of the query is represented by a single logically continuous block of records in the index, each one sharing the same value of log_machine. MAX(log_time) will of course be held by the last record in this block. MySQL just finds that last record and takes the log_time out of it.

Now, what if we have a multiple condition on log_machine?

Read the rest of this entry »

Things SQL needs: MERGE JOIN that would seek

One of the most known and least used join algorithms in SQL engines is MERGE JOIN.

This algorithm operates on two sorted recordsets, keeping two pointers that chase each other.

The Wikipedia entry above describes it quite well in terms of algorithms. I'll just make an animated GIF to make it more clear:

This is quite a nice and elegant algorithm, which, unfortunately, has two major drawbacks:

- It needs the recordsets to be sorted

- Even with the recordsets sorted, it is no better than a

HASH JOIN

The sorting part is essential for this algorithm and there is nothing that can be done with it: the recordsets should be sorted, period. Databases, however, often provide the records in the sorted order: from clustered tables, indexes, previously sorted and ordered subqueries, spool tables etc.

But even when the recordsets are already sorted, on equijoins the MERGE JOIN is hardly faster than a HASH JOIN.

Groups holding highest ranked items

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Nate asks:

I know you've addressed similar issues related to the

greatest-per-groupquery but this seems to be a different take on that.Example table:

t_group item_id group_id score 100 1 2 100 2 3 200 1 1 300 1 4 300 2 2 Each item may be in multiple groups. Each instance of an item in that group is given a score (how relevant it is the the group).

So given the data above, when querying for group 1 it should return items 200 and 300 (item 100's highest score is for group 2, so it's excluded).

The classical greatest-n-per-group

problem requires selecting a single record from each group holding a group-wise maximum. This case is a little bit different: for a given group, we need to select all records holding an item-wise maximum.

Let's create a sample table:

Read the rest of this entry »

Hierarchical query in MySQL: limiting parents

Answering questions asked on the site.

James asks:

Your series on hierarchical queries in MySQL is tremendous! I'm using it to create a series of threaded conversations.

I'm wondering if there is a way to paginate these results.

Specifically, let's say I want to limit the conversations to return 10 root nodes (

parent=0) and all of their children in a query.I can't just limit the final query, because that will clip off children. I've tried to add

LIMITs to your stored functions, but I'm not getting the magic just right.How would you go about doing this?

A quick reminder: MySQL does not support recursion (either CONNECT BY style or recursive CTE style), so using an adjacency list model is a somewhat complicated task.

However, it is still possible. The main idea is storing the recursion state in a session variable and call a user-defined function repeatedly to iterate over the tree, thus emulating recursion. The article mentioned in the question shows how to do that.

Normally, reading and assigning session variables in the same query is discouraged in MySQL, since the order of evaluation is not guaranteed. However, in the case we only use the table as a dummy recordset and no values of the records are actually used in the function, so the actual values returned by the function are completely defined by the function itself. The table is only used to ensure that the function is called enough times, and to present its results in form of a native resultset (which can be returned or joined with).

To do something with the logic of the function (like, imposing a limit on the parent nodes without doing the same on the child nodes), we, therefore, should tweak the function code, not the query that calls the functions. The only thing that matters in such a query is the number of records returned and we don't know it in design time.

Limiting the parent nodes is quite simple: we just use another session variable to track the number of parent branches yet to be returned and stop processing as soon as the limit is hit, that is the variable becomes zero.

Let's create a sample table and see how to do this:

Read the rest of this entry »

Date ranges: overlapping with priority

Comments enabled. I *really* need your comment

Answering questions asked on the site.

Jason Foster asks:

We have a table of student registrations:

Students student_code course_code course_section session_cd 987654321 ESC102H1 Y 20085 998766543 ELEE203H F 20085

course_codeandcourse_sectionidentify a course,session_cdis an academic session, e. g. 20085, 20091, 20079.The courses (stored in another table) have associated values for

engineering design,complementary studies, etc., like that:

Courses course_code course_section start_session end_session design science studies ESC102H1 F 20071 20099 10 0 0 AER201Y1 Y 20059 NULL 0 0 30 , or like that:

In-house courses course_code course_section student_code design science studies ESC102H1 F 998766543 10 0 0 We are required by an external accreditation body to add up all of the units of

engineering design,complementary studies, etc., taken by an individual student.Where it gets really messy is that we have multiple data feeds for the associated values of courses. For example we have a set from the Registrar's Office, the Civil Department, our In-House version, etc.

The rule is that In-House beats Civil beats the Registrar's Office in the case of any duplication within the overlapping intervals.

The

session_cdis of the formYYYY{1,5,9}.

Basically, we have three sets here.

To get the course hours for a given student we should find a record for him in the in-house set, or, failing that, find if the session is within the ranges of one of the external sets (Civil or Registrar). If both ranges contain the academic session the student took the course, Civil should be taken.

The first part is quite simple: we just LEFT JOIN students with the in-house courses and get the hours for the courses which are filled. The real problem is the next part: searching for the ranges containing a given value.

As I already mentioned in the previous posts, relational databases are not in general that efficient for the queries like that. It's easy to use an index to find a value of a column within a given range, but B-Tree indexes are of little help in searching for a range of two columns containing a given value.

However, in this case, the data domain of session_cd is quite a limited set. For each pair of session_start and session_end it is easy to create a set of all possible values between session_start and session_end.

The overlapping parts of the session ranges from the two sets will yields two records for each of the sessions belonging to the range. Of these two records we will need to take the relevant one (that is Civil) by using DISTINCT ON with the additional sorting on the source (Civil goes first).

Then we just join the relevant records to the subset of the students which does not have corresponding records in the in-house version.

Finally, we need to union this with the in-house recordset.

Read the rest of this entry »

Subscribe in a reader

Subscribe in a reader