Author Archive

Score charts, friendship lists and unique multiple columns: easy with SQL Server’s indexed views

Comments enabled. I *really* need your comment

SQL Server supports a really useful feature called indexed views. This means it lets you materialize results of some queries and build indexes over them. It’s as if you haven’t actually run the query yet - but it’s already complete, stored conveniently on the disk for you, ordered and waiting for you to read its results. Not all queries are eligible but some quite often used by the database developers are.

In this series, I'll describe three scenarios which could really benefit from using indexed views.

Scenario One: Unique multiple columns

Assume you are building a database for multiplayer online tic-tac-toe tournaments. The first thing you’d want would be recording game results: who played with whom and who won. This can be easily achieved with a simple layout:

| id | round | x | o | winner | start | duration |

|---|

Now we have additional requirement: no person should be able to play in a tournament more than once. How do we do that?

Read the rest of this entry on tech.pro »

SQL Server: joining NULL values

From Stack Overflow:

UPDATE n SET AddressID = a.AddressID FROM #NewAddress n JOIN dbo.Address a ON (a.[LineA] = n.[LineA] OR (a.[LineA] is null AND n.[LineA] is null)) AND (a.[LineB] = n.[LineB] OR (a.[LineB] is null AND n.[LineB] is null)) AND (a.[LineC] = n.[LineC] OR (a.[LineC] is null AND n.[LineC] is null)) WHERE n.Processed = 0Any ideas on how to UNION or EXISTS this query? This seems to be a pretty common join condition I'm encountering.

Here's a JOIN condition: we should join two tables on a common key, also treating NULL values as equal. Normal equality conditions don't work here: in SQL, NULL values are not considered equal (strictly speaking, their equality is a boolean NULL rather than true or false).

ANSI standard defines a special predicate for that: IS NOT DISTINCT FROM, which is supported by PostgreSQL. This treats values the same value as DISTINCT does: two equal values, as well as two NULL values, are considered not distinct. MySQL also supports similar operator, <=>. Unfortunately, SQL Server supports neither.

In SQL Server, we can emulate this by using set operators which distinctify the queries. Set operators work on sets, so we would need some kind of a SELECT query for them. This would also help us to overcome SQL Server's inability to compare tuples directly. Basically, we need to tell if two tuples: (a.lineA, a.lineB, a.lineC) and (n.lineA, n.lineB, n.lineC) are distinct or not.

We can do that by selecting those tuples in dummy queries (those without a FROM clause), intersecting those queries (which would give us a one-record or zero-record resultset, depending on whether the two tuples are distinct or not) and applying EXISTS to the resultset. EXISTS is a boolean predicate and as such can serve as a join condition.

Let's check it. We'll create two tables, fill them with random values (including occasional NULLs here and there):

INSERT … SELECT … OUTPUT …: returning source table data

From Stack Overflow:

I am trying to insert into the target table values from the source table, but also insert the primary key of the source table into the tracking table.

INSERT INTO TRN_TEMP (TRN_TRAN_DATE, TRN_DESCRIPTION, TRN_AMT) OUTPUT D.DET_PRIMARY, GETDATE() INTO REGISTER (DET_PRIMARY_LINK, INS_DATE) SELECT D.DET_DATE, 'SOMETEXT', SUM(D.DET_NET) FROM DETAIL D LEFT JOIN REGISTER R ON R.DET_PRIMARY_LINK = D.DET_PRIMARY WHERE R.LINE_ID IS NULL -- TO REMOVE LINES ALREADY PROCESSED GROUP BY D.DET_DATEI can't see a problem with the text above but I get an error:

The multi part identifier 'D.DET_PRIMARY' could not be bound.I've tried both

D.DET_DETAILandDETAIL.DET_DETAILand the error is the same.

This is a limitation of SQL Server which can be easily worked around by substituting MERGE instead of INSERT.

Let's create some sample tables and see:

CREATE TABLE source ( id INT NOT NULL PRIMARY KEY, grouper INT NOT NULL, value INT NOT NULL ) CREATE TABLE destination ( id INT NOT NULL IDENTITY PRIMARY KEY, grouper INT NOT NULL, aggregated_value INT NOT NULL ) CREATE TABLE register ( dest_id INT NOT NULL PRIMARY KEY, max_source_id INT NOT NULL UNIQUE, grouper INT NOT NULL UNIQUE, regdate DATE NOT NULL, ) INSERT INTO source VALUES (1, 1, 5), (2, 1, 2), (3, 2, 6), (4, 2, 8)

, and run the query:

MERGE INTO destination d USING ( SELECT grouper, SUM(value), MAX(id) FROM source WHERE grouper NOT IN ( SELECT grouper FROM register ) GROUP BY grouper ) s (grouper, aggregated_value, max_source_id) ON (1 = 0) WHEN NOT MATCHED THEN INSERT (grouper, aggregated_value) VALUES (grouper, aggregated_value) OUTPUT INSERTED.id, s.max_source_id, s.grouper, GETDATE() INTO register; SELECT * FROM register;

You can try it yourself on SQL Fiddle.

As you can see, we are using both destination table's INSERTED.id, source table's s.max_source_id, s.grouper and an arbitrary expression GETDATE() in the OUTPUT clause which works just alright.

An alert reader may notice that there is another problem with the original query, but this will be covered in the next post. Watch this space!

Empty row if condition does not match

Just found that in a Google referer to the blog:

I want SQL to return blank row even if the condition does not match

This may be useful for certain ORMs which always expect a single row as a result of a query.

Say, we have a query like that:

SELECT * FROM mytable WHERE id = 42

and want it to return a single row (possibly consisting of NULL values) no matter what.

If we had a join and the condition in the ON clause:

SELECT m.* FROM values v JOIN mytable m ON m.id = v.value

, we could just rewrite an INNER JOIN to a LEFT JOIN.

SELECT m.* FROM values v LEFT JOIN mytable m ON m.id = v.value

This way, we would have at least one record returned for each entry in values.

In our original query we don't have a table to join with. But we can easily generate it:

SELECT m.* FROM ( SELECT 42 AS value ) v LEFT JOIN mytable m ON m.id = v.value

If id is a PRIMARY KEY on mytable, this query would return exactly one record, regardless of whether it such an id exists in mytable or not.

Happy New Year: view of Earth from space in SQL

It's been a really long year.

The blog has not been updated during this year. I focused on improving my SQL skills and helping other folks to improve theirs.

During this year Explain Extended has become a team. We now do SQL consulting and database development. I'll write a post about it soon, but send me a message if you're impatient to wait.

Now, to the new year post.

New Year has always seemed like the most global thing to me. It does not happen to everyone at once. When I was a kid I wanted to go to space and see the New Year marching through the planet with my own eyes (and it's not that I don't want it anymore).

Now we have satellite images and such, but with a little effort we can see how our planet looks from space using SQL.

To do this, we need some map data, basic math and pretty simple SQL. I'll use PostgreSQL for that.

Read the rest of this entry »

Happy New Year: drawing snowflakes in SQL

This winter is anomalously warm in Europe, there is no snow and no New Year mood. So today we will be drawing a snowflake in PostgreSQL.

#1. A little theory

Core of a snowflake is six large symmetrical ice crystals growing from the common center. Out of these larger crystals other, smaller, crystals grow.

The overall shape of the snowflake is defined by how large do crystals grow and where exactly are they attached to each other.

These things are defined by fluctuations in air and temperature conditions around the snowflake. Because the flake itself is very small, in any given moment the conditions are nearly identical around each crystal, that's why the offspring crystals pop up in almost same places and grow to almost same lengths. Different flakes, though, constantly move to and from each other and are subject to very different fluctuations, and that's why they grow so unique.

Except for the root crystals (of which there are six), the child icicles grow in symmetrical pairs. More than that, each branch grows their own children (also in pairs), so on each step there are twice as many crystals, but they all share almost same length and angle. This gives the snowflake its symmetrical look.

So we can easily see that, despite the fact there may be many child crystals, the shape of a snowflake is defined by a relatively small number of parameters: how many children each crystal produces, where are they attached to it, at which angle they grow and to which length.

Now, let's try to model it.

Read the rest of this entry »

What’s UNPIVOT good for?

Answering questions asked on the site.

Karen asks:

… I've always thought

PIVOTandUNPIVOTare signs of a poorly designed database. I mean, is there a legitimate use for them if your model is OK?

I've made an actual use for them in a project I've been working on for the last several months (which is partly why there were no updates for so long!)

Part of the project is a task management system where each task has several persons related to it. There can be the creator of the task, the person the task is assigned to, the actual author of the task (on behalf of whom the task is created), and the task can be possible completed by a person or deleted by a person. A total of 5 fields related to persons.

Now, we need to take all tasks within a certain time range and list all people involved in them.

Let's create a sample table and see how would we do that.

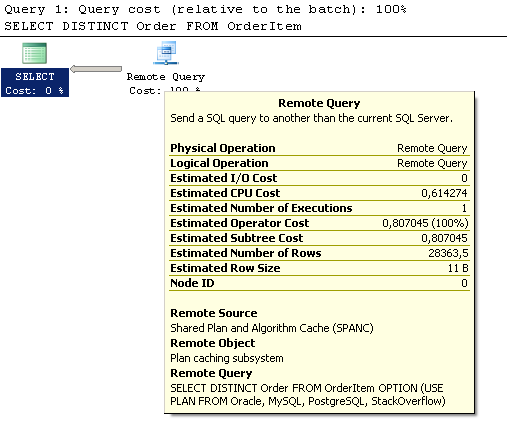

Shared Plan and Algorithm Network Cache (SPANC)

Due to the nature of my work I have to deal with various database systems.

While SQL is more or less standardized, the optimizers are implemented differently in the different systems. Some systems cannot join tables with anything other than nested loops, the other can only GROUP BY using a sort, etc.

So when you write a join in, say, MySQL, you cannot expect it to be a sort merge join (and you should consider this fact when designing the query). Or, when you write a DISTINCT in SQL Server, you can't expect a loose index scan. These are limitations put by their optimizers.

However, in the last three months I noticed a great improvement in the queries where I could not expect any.

It started when I tried to debug this in SQL Server:

SELECT DISTINCT order FROM orderItem

while yielded this plan:

Similar results were obtained on Oracle:

Plan hash value: 1345318323 --------------------------------------------------------------------------------------------------------------------------------- | Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time | --------------------------------------------------------------------------------------------------------------------------------- | 0 | SELECT STATEMENT | | | 200 | 2 (50)| 00:00:01 | | 1 | REMOTE SPANC QUERY (SQLSERVER, MYSQL, POSTGRESQL, STACKOVERFLOW) | | | 200 | 2 (50)| 00:00:01 | ---------------------------------------------------------------------------------------------------------------------------------

, MySQL:

+----+-------------+-----------+-------+---------------+---------+---------+------+---------+-----------------------------------------------------+ | id | select_type | table | type | possible_keys | key | key_len | ref | rows | Extra | +----+-------------+-----------+-------+---------------+---------+---------+------+---------+-----------------------------------------------------+ | 1 | SIMPLE | orderItem | spanc | NULL | ALL | NULL | NULL | | Using Oracle, PostgreSQL, SQL Server, StackOverflow | +----+-------------+-----------+-------+---------------+---------+---------+------+---------+-----------------------------------------------------+

and PostgreSQL:

Seq Scan on OrderItem (cost=0.00..6.44 width=4) -> Remote Scan on SPANC (Oracle, MySQL, SQL Server, StackOverflow) (cost=0.00..100.00 width=4)

Network analysis has shown weird encrypted activity between the servers in my internal network which host SQL Server, Oracle, PostgreSQL and MySQL servers.

Ultimately, there was unencrypted activity outside of the internal network which turned out to be an HTTP POST request followed by several GET polls to http://stackoverflow.com/questions/5518080/distinct-optimization.

It seems that the developers of major database systems agreed to share the knowledge about the most efficient query plans in some kind of a distributed storage (which probably is called SPANC as we can see in the query plans) and provide an interface to access each other's systems.

It also seems that these systems treat Stack Overflow as an external optimization engine where the most experienced developers can build their plans for them in a most efficient way.

I would be glad to have further clarification from the companies staff.

This also begs a question: how many of regular Stack Overflow participants are in fact query engines disguised as curious fellow developers?

It would be definitely nice to know.

MySQL: GROUP BY in UNION

From Stack Overflow:

I have a query where I have a custom developed UDF that is used to calculate whether or not certain points are within a polygon (first query in

UNION) or circular (second query inUNION) shape.SELECT a.geo_boundary_id, … FROM geo_boundary_vertex a, … … GROUP BY a.geo_boundary_id UNION SELECT b.geo_boundary_id, … FROM geo_boundary b, … … GROUP BY b.geo_boundary_idWhen I run an explain for the query I get

filesortfor both queries within theUNION.Now, I can split the queries up and use the

ORDER BY NULLtrick to get rid of thefilesorthowever when I attempt to add that to the end of aUNIONit doesn't work.How do I get rid of the

filesort?

In MySQL, GROUP BY also implies ORDER BY on the same set of expressions in the same order. That's why it adds an additional filesort operation to sort the resultset if it does not come out naturally sorted (say, from an index).

This is not always a desired behavior, and MySQL manual suggests adding ORDER BY NULL to the queries where sorting is not required. This can improve performance of the queries.

Let's create a sample table and see:

MySQL: splitting aggregate queries

Answering questions asked on the site.

Victor asks:

I have a table which I will call

saleto protect the innocent:

Sale id product price amount date I need to retrieve ultimate values of

price,amountanddatefor each product:SELECT product, MIN(price), MAX(price), MIN(amount), MAX(amount), MIN(date), MAX(date) FROM sale GROUP BY productThe query only returns about 100 records.

I have all these fields indexed (together with

product), but this still produces a full table scan over 3,000,000 records.How do I speed up this query?

To retrieve the ultimate values of the fields, MySQL would just need to make a loose index scan over each index and take the max and min value of the field for each product.

However, the optimizer won't do when multiple indexes are involved. Instead, it will revert to a full scan.

There is a workaround for it. Let's create a sample table and see them:

Subscribe in a reader

Subscribe in a reader