Archive for 2009

Recursive CTE’s: PostgreSQL

Comments enabled. I *really* need your comment

In the previous article on recursive CTE's in SQL Server I demonstrated that they are not really set-based.

SQL Server implements the recursive CTE's syntax, but forbids all operations that do not distribute over UNION ALL, and each recursive step sees only a single record from the previous step.

Now, let's check the same operations in PostgreSQL 8.4.

To do this, we will write a query that selects only the very first branch of a tree: that is, each item would be the first child of its parent. We should select the item that would be the first child of the root, then select the first child of that item, etc.

This is a set-based operation.

Oracle's CONNECT BY syntax, despite being row-based, offers some limited set-based capabilities: you can use the ORDER SIBLINGS BY clause to define the order in which the siblings are returned. However, this would require some additional work to efficiently return only the first branch.

In a true set-based system, this is much simpler.

Let's create a sample table:

Read the rest of this entry »

SQL Server: are the recursive CTE’s really set-based?

Answering questions asked on the site.

Karl asks:

I've read your article about adjacency lists vs. nested sets in Oracle. A nice explanation, thanks.

You write that recursive operations are row-based in Oracle and set-based in SQL Server.

Which approach is better in your opinion?

First, a quick reminder for those who have no idea what this is all about.

Recursive queries are useful to build the hierarchy trees, traverse graphs, generate arbitrary rowsets etc. Usually this involves joining a set with itself an arbitrary number of times.

A recursive query is usually defined by the anchor part (the initial rowset, or the base of recursion) and the recursive part (some operation over the previous resultset).

This is the same as with plain recursion: take some initial data as a parameter, modify it in some way and then pass the result of the modification as a new parameter to the same function.
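To make the anchor part and the recursive part more tangible, here is a minimal sketch of a recursive CTE that walks an adjacency list. It runs through Python's built-in sqlite3 module only so that it can be tried without a database server. SQLite is not one of the systems discussed here, and the table `t_hierarchy` with its four rows is made up for the illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE t_hierarchy (id INTEGER PRIMARY KEY, parent INTEGER);
    -- Hypothetical sample tree: 1 is the root, 2 and 3 are its children,
    -- 4 is a child of 2.
    INSERT INTO t_hierarchy VALUES (1, NULL), (2, 1), (3, 1), (4, 2);
""")

rows = conn.execute("""
    WITH RECURSIVE q (id, parent, level) AS (
        -- Anchor part: the initial rowset (the root of the tree)
        SELECT id, parent, 1
        FROM   t_hierarchy
        WHERE  parent IS NULL
        UNION ALL
        -- Recursive part: children of the rows produced by the previous step
        SELECT h.id, h.parent, q.level + 1
        FROM   q
        JOIN   t_hierarchy h
        ON     h.parent = q.id
    )
    SELECT id, parent, level
    FROM   q
    ORDER BY level, id
""").fetchall()

for row in rows:
    print(row)
```

The final ORDER BY is there only to make the output deterministic; the order in which the recursion itself produces rows depends on the engine.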

Since SQL operates with sets, the input and the output of this function should also be sets. And here is the main difference between the approaches used by Oracle on one side, and SQL Server, PostgreSQL and some more systems on the other side.

Oracle uses the CONNECT BY approach, while SQL Server uses the recursive CTE approach.

Read the rest of this entry »

Selecting most relevant row

From Stack Overflow:

I have whole bunch of contact data that may or may not contain all info, like this:

| Name | Address1 | Address2 | Email |
|---|---|---|---|
| Test User | Address1 | Address2 | test@example.com |
| NULL | NULL | NULL | test@example.com |

For each email address, I would love to extract the most relevant record, that is the row with the contact information rather than the row with empty fields

To do this we need a sorting criterion: how to tell that a record is considered more relevant than another record. We can use the number of the fields filled as a criterion.
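Here is a minimal sketch of that criterion, run through Python's built-in sqlite3 module so that it can be tried without a server. The table `t_contact`, its `id` column (used only to break ties) and the second pair of rows are assumptions made for the illustration, not part of the original question.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical table; the id column is an assumption added as a tie-breaker
    CREATE TABLE t_contact (
        id INTEGER PRIMARY KEY,
        name TEXT, address1 TEXT, address2 TEXT,
        email TEXT NOT NULL
    );
    INSERT INTO t_contact VALUES
        (1, 'Test User', 'Address1', 'Address2', 'test@example.com'),
        (2, NULL, NULL, NULL, 'test@example.com'),
        (3, NULL, NULL, NULL, 'other@example.com'),
        (4, 'Other User', NULL, NULL, 'other@example.com');
""")

rows = conn.execute("""
    SELECT c.id, c.name, c.email
    FROM   t_contact c
    WHERE  c.id = (
           -- The most relevant row per email: most filled fields first,
           -- lowest id on ties
           SELECT ci.id
           FROM   t_contact ci
           WHERE  ci.email = c.email
           ORDER BY
                  (ci.name IS NOT NULL)
                  + (ci.address1 IS NOT NULL)
                  + (ci.address2 IS NOT NULL) DESC,
                  ci.id
           LIMIT 1
           )
    ORDER BY c.email
""").fetchall()

for row in rows:
    print(row)
```

Each `IS NOT NULL` test evaluates to 0 or 1, so their sum is the number of filled fields. Exactly one row per email survives, even when several rows are equally complete.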

Let's create a sample table:

Inverting date ranges: MySQL

Answering questions asked on the site.

Maxym asks:

Inverting date ranges is an interesting article.

I had the same problem, and I solved it almost the same way in PostgreSQL. Your solution is great! The problem is to implement it on MySQL :) And of course performance there.

Do you have any idea how to transform this query for MySQL?

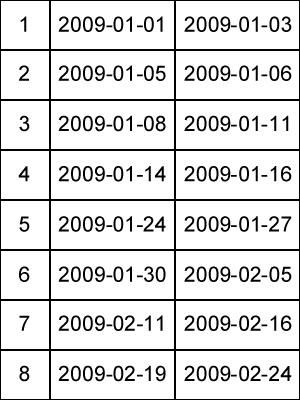

The query in question regards inverting contiguous non-overlapping date ranges: transforming them so that the gaps become the ranges and the ranges become the gaps.

The following set:

| StartDate | EndDate |
|---|---|
| 2009-11-12 | 2009-11-13 |
| 2009-11-15 | 2009-11-19 |

should become this:

| StartDate | EndDate |
|---|---|
| NULL | 2009-11-12 |
| 2009-11-13 | 2009-11-15 |
| 2009-11-19 | NULL |

The link above shows how to make this query in SQL Server and can be applied to Oracle and PostgreSQL 8.4 as well.

However, the query in question relies on FULL OUTER JOIN and ROW_NUMBER. MySQL lacks both of these.

To implement this query in MySQL we need to use a certain workaround.
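As a rough sketch of what such a workaround might look like (not necessarily the one used in the full article), the inversion can be written with nothing but UNION ALL and a correlated scalar subquery, so it depends on neither FULL OUTER JOIN nor ROW_NUMBER. The example runs through Python's built-in sqlite3 module purely for convenience. The table name `t_range` is an assumption, and the two rows are the sample set shown above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE t_range (StartDate TEXT NOT NULL, EndDate TEXT NOT NULL);
    INSERT INTO t_range VALUES
        ('2009-11-12', '2009-11-13'),
        ('2009-11-15', '2009-11-19');
""")

gaps = conn.execute("""
    -- The gap before the first range: open start, ends where the first range begins
    SELECT NULL AS StartDate, MIN(StartDate) AS EndDate
    FROM   t_range
    UNION ALL
    -- Every range's end opens a gap that lasts until the next range starts
    -- (the subquery yields NULL for the last range, leaving the gap open)
    SELECT r.EndDate,
           (
           SELECT MIN(n.StartDate)
           FROM   t_range n
           WHERE  n.StartDate > r.StartDate
           )
    FROM   t_range r
    ORDER BY 1
""").fetchall()

for gap in gaps:
    print(gap)
```

The correlated subquery is evaluated once per range, so on a large table this sketch relies on an index on StartDate to stay reasonably fast. It says nothing about how the MySQL optimizer would actually plan it.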

Let's create a sample table:

Read the rest of this entry »

SQL Server: clustered index and ordering

From Stack Overflow:

My table (SQL Server 2008) has more than 1 million records.

When I try to order records by datetime, it takes 1 second, but when I order by id (integer), it only takes about 0.1 second.

Is there any way to improve the efficiency?

The obvious answer would be to create an index on datetime.

However, things are not that simple.

Let's create a sample table and see why:

Read the rest of this entry »

MySQL: average of sums using indexes

From Stack Overflow:

My table looks like:

| person_id | car_id | miles |
|---|---|---|
| 1 | 1 | 100 |
| 1 | 2 | 200 |
| 2 | 3 | 1000 |
| 2 | 4 | 500 |

I need to total the miles for each person and then average those totals.

This is a good question to demonstrate how to improve MySQL queries using covering indexes.

The query should return a single value, which is SUM(miles) / COUNT(DISTINCT person_id). Since this query does not relate the fields to each other (only total sum and total count matter), it could be performed using only the indexes on miles and person_id. However, MySQL is not able to do this by default and it requires a little help from developer's side.

Let's create a sample table:

This table has 1,000,000 rows with 1,000 distinct person_id's. There are indexes on miles and person_id.

A plain query would look like this:

    SELECT  SUM(miles) / COUNT(DISTINCT person_id) AS total
    FROM    persons

| total |
|---|
| 4998778.6000 |

1 row fetched in 0.0001s (2.1468s)

| id | select_type | table | type | possible_keys | key | key_len | ref | rows | filtered | Extra |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | SIMPLE | persons | ALL | | | | | 1000066 | 100.00 | |

select (sum(`20091110_indexes`.`persons`.`miles`) / count(distinct `20091110_indexes`.`persons`.`person_id`)) AS `total` from `20091110_indexes`.`persons`

The query takes more than 2 seconds.

If we look into the query plan we will see that a full table scan is used. The query needs both the values of person_id and miles and no index contains both values. MySQL browses all table records which are quite large.

But although the query needs both values, it does not need to match them to each other. It may calculate the SUM first (using an index on miles), and COUNT(DISTINCT person_id) in another query. Since index records are much smaller than the table records, the separate aggregates on them will be calculated much faster.

And we can improve the query even more by replacing COUNT(DISTINCT person_id) with a COUNT over DISTINCT person_id in a subquery. The latter query will use INDEX FOR GROUP BY and therefore be almost instant.

Here's what the improved query looks like:

    SELECT  SUM(miles) /
            (
            SELECT  COUNT(*)
            FROM    (
                    SELECT  DISTINCT person_id
                    FROM    persons
                    ) q
            ) AS total
    FROM    persons

| total |
|---|
| 4998778.6000 |

1 row fetched in 0.0001s (0.7344s)

| id | select_type | table | type | possible_keys | key | key_len | ref | rows | filtered | Extra |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | PRIMARY | persons | index | | ix_persons_miles | 4 | | 1000066 | 100.00 | Using index |
| 2 | SUBQUERY | | | | | | | | | Select tables optimized away |
| 3 | DERIVED | persons | range | | ix_persons_person | 4 | | 2021 | 100.00 | Using index for group-by |

select (sum(`20091110_indexes`.`persons`.`miles`) / (select count(0) AS `COUNT(*)` from (select distinct `20091110_indexes`.`persons`.`person_id` AS `person_id` from `20091110_indexes`.`persons`) `q`)) AS `total` from `20091110_indexes`.`persons`

This query uses separate indexes to calculate the aggregate values, and by using INDEX FOR GROUP-BY, COUNT(DISTINCT person_id) is calculated almost instantly.

Overall, the query now takes about 730 ms, which is almost three times as fast as the original query.

Inverting date ranges

From Stack Overflow:

In an SQL table I keep bookings for various resources, with a StartDate/EndDate column:

| ResourceID | StartDate | EndDate |
|---|---|---|
| 1 | 2009-01-01 | 2009-01-05 |
| 1 | 2009-01-07 | 2009-01-10 |
| 2 | 2009-01-03 | 2009-01-18 |

A record means that the given resource is busy.

I need to produce a list of all resources that are available for at least X consecutive days in a given time interval, e. g. from 2009-01-01 to 2009-01-20 and 5 consecutive days.

This would be quite an easy task if the table records reflected available resources. We could just check that a resource exists using EXISTS and that it has given length using DATEDIFF.

However, the table reflects the busy resources. This means we should invert the date ranges: given a list of consecutive non-overlapping date ranges, we should build a list of their complements, or the gaps between them.

Here's how we do it.

First, we should build an ordered set of the date ranges. Since the ranges are not overlapping, the set can be ordered by any column (StartDate or EndDate), with the result being the same:

MySQL ORDER BY / LIMIT performance: late row lookups

From Stack Overflow:

When I run an SQL command like the one below, it takes more than 15 seconds:

SELECT * FROM news WHERE cat_id = 4 ORDER BY id DESC LIMIT 150000, 10

EXPLAIN shows that it's using where and the index on (cat_id, id)

LIMIT 20, 10 on the same query only takes several milliseconds.

This task can be reformulated like this: take the last 150,010 rows in id order and return the first 10 of them

It means that though we only need 10 records we still need to count off the first 150,000.

The table has an index which keeps the records ordered. This allows us not to use a filesort. However, the query is still far from being efficient: 15 seconds for 150,000 records (which are already ordered) is way too much.

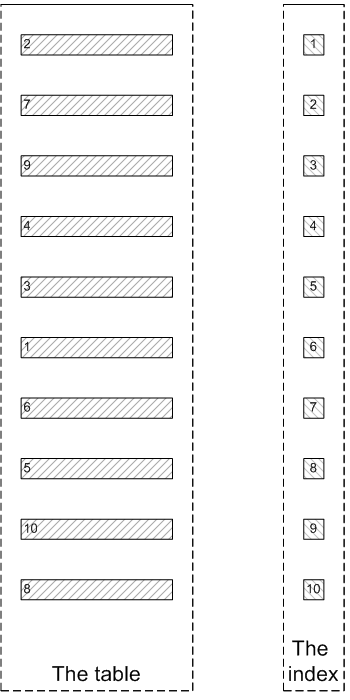

To better understand the reason behind the low performance let's look into this picture:

As we already said before, there is an index created on the table. Logically, an index is a part of a table which is not even visible from the SQL side: all queries are issued against the table, not the index, and the optimizer decides whether to use the index or not.

However, physically, an index is a separate object in the database.

An index is a shadow copy of the table which stores some subset of the table's data:

- Index key, i. e. the columns on which the index is created.

- Table pointer, that is some value that uniquely identifies a row the record reflects. In InnoDB, it is the value of the PRIMARY KEY; in MyISAM, it is an offset in the .MYD file.

The index records are stored in a B-Tree structure which makes the ref and range searching on them super fast.

However, the index itself does not contain all of the table's data: only the subset we described above. To find the actual table values while preserving order, one needs to join the index and the table. That is, for each index record the engine should find the corresponding table record (using the row pointer) and return all non-indexed values from the table itself.

Here's how it looks:

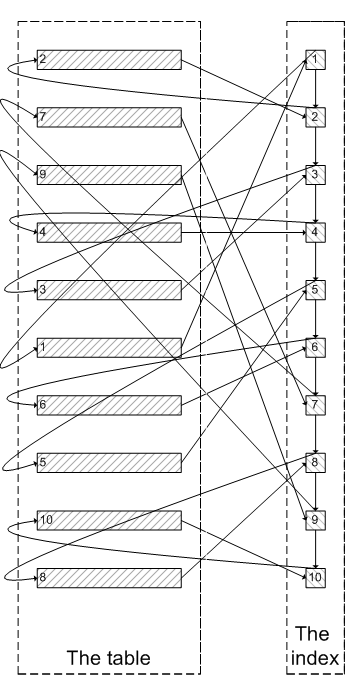

The process of fetching the table records corresponding to the index records is called row lookup. It is pictured by the curvy arrows connecting the index and the table.

Since the index records and the table records are located far away from each other in the memory and on the disk, the row lookup leads to many more page misses, cache misses and disk seeks than sequential access and is therefore quite costly. It takes much time to traverse all the connectors on the picture above.

If we do a plain query which returns all the records we of course need to fetch all the records and each row lookup is necessary.

But do we really need it when we use a LIMIT clause with the offset greater than 0?

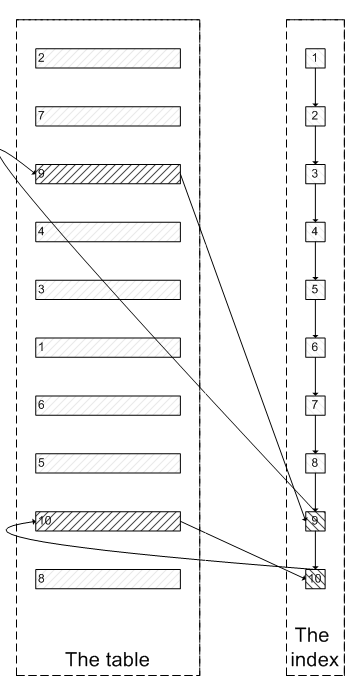

If we did something like LIMIT 8, 2 we could just skip the first 8 values using the index and the return the remaining two:

We see that this is a much more efficient algorithm that will save us lots of row lookups.

This is called late row lookup: the engine should look a row up only if there is no way to avoid it. If there is a chance that a row will be filtered out by using the indexed fields only, it should be done before the rows are looked up in the actual MySQL table. There is no point in fetching the records out of the table just to discard them.

However, MySQL always does early row lookup: it searches for a row prior to checking values in the index, even if the optimizer decided to use the index.

Let's create a sample table and try to reproduce this behavior:

    CREATE TABLE filler (
        id INT NOT NULL PRIMARY KEY AUTO_INCREMENT
    ) ENGINE=Memory;

    CREATE TABLE t_limit (
        id INT NOT NULL,
        value VARCHAR(20) NOT NULL,
        stuffing VARCHAR(200) NOT NULL,
        CONSTRAINT pk_limit_id PRIMARY KEY (id)
    ) ENGINE=MyISAM DEFAULT CHARSET=utf8;

    DELIMITER $$

    CREATE PROCEDURE prc_filler(cnt INT)
    BEGIN
        DECLARE _cnt INT;
        SET _cnt = 1;
        WHILE _cnt <= cnt DO
            INSERT
            INTO    filler
            SELECT  _cnt;
            SET _cnt = _cnt + 1;
        END WHILE;
    END
    $$

    DELIMITER ;

    START TRANSACTION;
    CALL prc_filler(200000);
    COMMIT;

    INSERT
    INTO    t_limit
    SELECT  id, CONCAT('Value ', id), RPAD('', 200, '*')
    FROM    filler;

This MyISAM table contains 200,000 records and has a PRIMARY KEY index on id. Each record is filled with 200 bytes of stuffing data.

Here's the query to select values from 150,001 to 150,010:

    SELECT  id, value, LENGTH(stuffing) AS len
    FROM    t_limit
    ORDER BY
            id
    LIMIT 150000, 10

This query runs for almost 6 seconds, which is way too long.

It, however, uses a filesort which the optimizer considered more efficient than using the index. This would make sense if not for the stuffing field which is too long to be sorted efficiently. In this very case traversing the index would be faster.

Let's try to force the index:

    SELECT  id, value, LENGTH(stuffing) AS len
    FROM    t_limit FORCE INDEX (PRIMARY)
    ORDER BY
            id
    LIMIT 150000, 10

Now it is only 1.23 seconds but still too long due to the early row lookups.

We can, however, trick MySQL into using late row lookups.

We will only select the id in the subquery with an ORDER BY and LIMIT and then join the original table back on id.

This will make each individual row lookup less efficient, since each join will require looking up the index value again. However, this is not a big deal, and the total number of lookups will be reduced greatly, so an overall performance increase is expected:

    SELECT  l.id, value, LENGTH(stuffing) AS len
    FROM    (
            SELECT  id
            FROM    t_limit
            ORDER BY
                    id
            LIMIT 150000, 10
            ) o
    JOIN    t_limit l
    ON      l.id = o.id
    ORDER BY
            l.id

This is only 75 ms, or 16 times as fast as the previous query.

Note that we put an additional ORDER BY to the end of the query, since the order is not guaranteed to be preserved after the join. This resulted in an extra filesort in the plan. However, the actual plan used outputs the values already sorted and this filesort, therefore, will require only a single pass over 10 values which is instant.

Generating row placeholders: SQL Server

Answering questions asked on the site.

Vladimir asks:

I have two tables: one contains document headers, the other one contains document items.

The document header defines how many items should be in this document (usually 10 or 15, but this can vary).

I need to generate a list of documents and a list of document items to feed them to a third party control. Normally, this could be easily achieved by doing a SELECT * from both tables, but there is a problem. The control requires that the number of items for each document is equal to the value of the column in the document header.

That is, if document header says 15 items, there should be exactly 15 records for this document in the items rowset, even if there are less items in the actual table. It's OK if the extra items have a value of NULL.

I tried to use LEFT JOIN and FULL JOIN but cannot figure out how to use them.

This is quite a common problem in SQL world.

SQL as such has no means to generate sets, only to transform them. In classical SQL, if we have a relation that contains 2 records on input and need to have a relation that contains 16 records on output, then we will need to do at least 4 cross joins — just to have enough rows to begin with.

There are two solutions to that problem.

First is having a dummy rowset handy: create a table of 1,000,000 records or use some kind of a system table whose only purpose is to provide enough rows (the actual values of them can be completely ignored).

The second solution is to generate rows on the fly using system-provided means. This is impossible in MySQL; however, all other major systems can do it: using generate_series (PostgreSQL, the only system that has a native tool specifically for this purpose), a CONNECT BY query (Oracle) or, in your case, a recursive CTE (SQL Server).

Recursive queries are somewhat less efficient than stored tables, since they require looping and therefore incur many logical reads, while reading from a dummy table requires but a few table scans.

However, the recursive queries also have several advantages:

- They are always available, even if you are querying a database that lacks a dummy table and you don't have write access to the database

- They always have enough records

- They have consistent plans. If the dummy table contains lots of records and the optimizer chooses a full scan, this can kill performance

That's why I'll base my solution on the recursive CTE.

The main idea to generate a list of document items is simple:

- We should replicate each document header exactly as many times as the header says (using a recursive CTE), with an ordinal number

- We should also assign an ordinal number to each document item, using ROW_NUMBER()

- Finally, we should just left join the two resultsets
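The three steps above can be sketched roughly as follows. This is only an illustration, run through Python's built-in sqlite3 module (window functions need SQLite 3.25 or later) rather than on SQL Server. The tables `t_header` and `t_item`, their columns and their rows are all assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical schema: item_count says how many rows the control expects
    CREATE TABLE t_header (id INTEGER PRIMARY KEY, item_count INTEGER NOT NULL);
    CREATE TABLE t_item (
        id INTEGER PRIMARY KEY,
        header_id INTEGER NOT NULL,
        name TEXT NOT NULL
    );
    INSERT INTO t_header VALUES (1, 3), (2, 2);
    INSERT INTO t_item VALUES (1, 1, 'A'), (2, 1, 'B'), (3, 2, 'C');
""")

rows = conn.execute("""
    WITH RECURSIVE placeholder (header_id, item_count, rn) AS (
        -- Anchor part: one row per header (headers expecting no items are skipped)
        SELECT id, item_count, 1
        FROM   t_header
        WHERE  item_count >= 1
        UNION ALL
        -- Recursive part: repeat the header until item_count rows exist
        SELECT header_id, item_count, rn + 1
        FROM   placeholder
        WHERE  rn < item_count
    ),
    numbered AS (
        -- Ordinal number of each actual item within its document
        SELECT header_id, name,
               ROW_NUMBER() OVER (PARTITION BY header_id ORDER BY id) AS rn
        FROM   t_item
    )
    SELECT p.header_id, p.rn, n.name
    FROM   placeholder p
    LEFT JOIN numbered n
    ON     n.header_id = p.header_id
           AND n.rn = p.rn
    ORDER BY p.header_id, p.rn
""").fetchall()

for row in rows:
    print(row)
```

Document 1 declares three items but has only two, so its third row comes back with a NULL name. Document 2 is padded the same way.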

The query will look like this:

Read the rest of this entry »

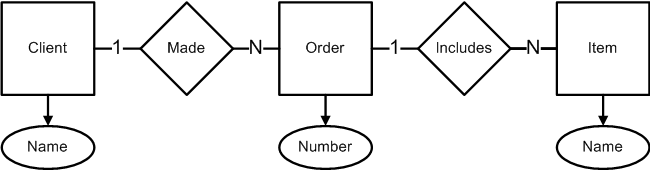

What is entity-relationship model?

Image by Samuel Mann

A relational database, as we all know from the previous article, stores relations between integers, strings and other simple types in a very plain way: it just enumerates all values related.

This model is extremely flexible, since other relations can be easily derived from existing ones using mathematical formulae, and the relational database takes care of that.

However, a database should reflect the real world in some way to be really of use. Since relational databases store relations between mathematical abstractions and not real world things, we should make some kind of a mapping of ones to the others.

This is what the entity-relationship model is for.

An entity-relationship model, as one can easily guess from its name, models relationships between entities.

But since we know that databases do essentially the same, how does it differ from the database model?

- An entity-relationship model states which data and relations between them should be stored

- A database model states how these relations are stored

In other words, the ER model is the design and the database model is one of the ways to implement it. The ER model is said to be above the database model in waterfall development.

Note that in both cases I use the word stored above. The model says nothing of data and relations between them that may or should exist, only of those that should be stored. Every human being participates in thousands if not millions of relationships, but an ER model should state which of them are to be stored, and a relational model should decide how to store them. Of course it would be nice to store them all (and police and insurance would be just happy), but the technology does not allow it yet. I don't know whether that is good or bad.

A typical entity-relationship diagram in Peter Chen's notation looks like this:

What does it mean?

Read the rest of this entry »
